Artificial Intelligence 3E

foundations of computational agents

11.5 Counterfactual Reasoning

The preceding analysis was for intervening before observing. The other case is observing then intervening. When the intervention is different from what actually happened, this is counterfactual reasoning, which asks “what if something else were true?” Here we use a more general notion of counterfactual, where you can ask “what if $x$ were true?” without knowing whether $x$ is true.

Pearl [2009] created the following example.

Example 11.12.

Consider a case of a firing squad, where a captain can give an order to a number of shooters who can each shoot to kill. One reason for using a firing squad is that each shooter can think “I wasn’t responsible for killing the prisoner, because the prisoner would be dead even if I didn’t shoot.”

Suppose the captain has some probability of giving the order. The shooters each probabilistically obey the order to shoot or the order to not shoot. Assume they deviate from orders with low probabilities. The prisoner is dead if any of the shooters shoot.

One counterfactual is “if the second shooter shot, what would have happened if the second shooter had not shot?” The fact that the second shooter shot means that the order probably was given, and so the first shooter probably also shot, so the prisoner would probably be dead. The following analysis shows how to construct the probability distribution for the variables in the resulting situation.

Another counterfactual query is “if the prisoner died, what would have happened if the second shooter had not shot?” In a narrow reading, this might not be a counterfactual, as the second shooter might not have actually shot. However, this case is also covered below.

Counterfactual reasoning is useful when you need to assign blame. For example, in a self-driving car that has an accident, it might be useful to ask what would have happened if it had turned left instead of braking or if it had used its horn, when it sensed something on the road. If there is nothing it could have done, perhaps the accident was not its fault. Assigning blame is used in the law to provide penalties for those who make bad choices (e.g., would a death have occurred if they did not do some action), in the hope that they and others will make better decisions in the future.

Suppose you have a causal network. Modeling the observation of $O$, and then asking “what if $a$?”, consists of three steps:

1. Determining what must be true for $O$ to be observed. This is an instance of abduction.
2. Intervening to make $a$ true.
3. Querying the resulting model, using the posterior probabilities from the first step.
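The three steps can be sketched in Python for a model written as deterministic mechanisms with independent Boolean noise inputs. This is a minimal illustration under stated assumptions, not the book's implementation: the helper names `run` and `counterfactual`, the simplified firing squad with a single obedience flag per shooter, and all the probabilities are invented for the example.

```python
from itertools import product

def run(mechanisms, noise, forced):
    """Evaluate mechanisms in order; variables in `forced` are set by intervention."""
    values = dict(noise)
    for var, fn in mechanisms.items():
        values[var] = forced[var] if var in forced else fn(values)
    return values

def counterfactual(noise_probs, mechanisms, observed, intervention, query):
    """P(query is true after the intervention | observation in the original model)."""
    names = list(noise_probs)
    # Step 1 (abduction): weight each noise setting consistent with the observation.
    posterior = {}
    for bits in product([True, False], repeat=len(names)):
        noise = dict(zip(names, bits))
        actual = run(mechanisms, noise, {})
        if all(actual[v] == val for v, val in observed.items()):
            weight = 1.0
            for n in names:
                weight *= noise_probs[n] if noise[n] else 1 - noise_probs[n]
            posterior[bits] = weight
    total = sum(posterior.values())
    # Step 2 (intervention) and step 3 (query), weighted by the step 1 posterior.
    answer = 0.0
    for bits, weight in posterior.items():
        alt = run(mechanisms, dict(zip(names, bits)), intervention)
        if alt[query]:
            answer += weight / total
    return answer

# Assumed simplified model: a shooter shoots exactly when the order and the
# shooter's obedience flag agree. All numbers are hypothetical.
noise_probs = {"order": 0.5, "obeys1": 0.9, "obeys2": 0.9}
mechanisms = {
    "s1": lambda v: v["order"] == v["obeys1"],
    "s2": lambda v: v["order"] == v["obeys2"],
    "dead": lambda v: v["s1"] or v["s2"],
}

# "The second shooter shot; would the prisoner be dead had the second shooter not shot?"
p = counterfactual(noise_probs, mechanisms, {"s2": True}, {"s2": False}, "dead")
print(round(p, 2))  # 0.82
```

With these assumed numbers, observing that shooter 2 shot raises the probability that the order was given from 0.5 to 0.9, and that posterior is what makes the prisoner probably dead in the counterfactual scenario.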

This can be implemented by constructing an appropriate belief network, from which queries about the counterfactual situation can be made. The construction of the belief network for the counterfactual “what if $a$”, where $a$ is one of the values for variable $A$, is as follows:

- Represent the problem using a causal network, where conditional probabilities are in terms of a deterministic system with stochastic inputs, such as a probabilistic logic program or a probabilistic program.
- Create a node $A'$ with the same domain as $A$ but with no parents ($A'$ is called a primed variable below).
- For each descendant $D$ of $A$ in the original model, create a node $D'$ sharing the same parents and conditional probability as $D$, except that any parent that is $A$ or a descendant of $A$ is replaced by its primed version.
- Condition on $A' = a$ and condition on the observations of the initial situation using unprimed variables.

The primed variables are the variables for the counterfactual scenario. The non-primed variables are for the original scenario. Variables other than $A$ that are not descendants of $A$ have no primed version and are the same in the original and the counterfactual scenario. This includes all probabilistic variables, because of the representation for conditional probabilities.

Any of the original variables can be conditioned on to give the initial situation, and any primed variables can be queried to answer queries about the counterfactual situation. Any belief network inference algorithm can be used to compute the posterior probabilities.
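The structural part of this construction can be sketched as a function on a parents map. This is an illustrative sketch only: the functions `descendants` and `counterfactual_network` are hypothetical helpers, and the node names are assumed labels for the firing squad variables (an order, two shooters each with two noise inputs, and the prisoner's death).

```python
def descendants(parents, var):
    """All variables reachable from `var` by following parent-to-child links."""
    children = {}
    for child, pars in parents.items():
        for p in pars:
            children.setdefault(p, []).append(child)
    found, stack = set(), [var]
    while stack:
        for c in children.get(stack.pop(), []):
            if c not in found:
                found.add(c)
                stack.append(c)
    return found

def counterfactual_network(parents, var):
    """Parents map of the network for 'what if `var` had a given value'."""
    desc = descendants(parents, var)
    primed = desc | {var}  # variables that get a primed copy
    twin = {v: list(ps) for v, ps in parents.items()}
    twin[var + "'"] = []  # the intervened copy has no parents
    for d in desc:
        # Same parents as d, using the primed copy wherever one exists.
        twin[d + "'"] = [p + "'" if p in primed else p for p in parents[d]]
    return twin

# Assumed structure of the firing squad model.
parents = {
    "Order": [], "S1o": [], "S1n": [], "S2o": [], "S2n": [],
    "S1": ["Order", "S1o", "S1n"],
    "S2": ["Order", "S2o", "S2n"],
    "Dead": ["S1", "S2"],
}

twin_s2 = counterfactual_network(parents, "S2")
print(twin_s2["Dead'"])  # ['S1', "S2'"]

twin_order = counterfactual_network(parents, "Order")
print(twin_order["S1'"])  # ["Order'", 'S1o', 'S1n']
```

Only the graph is built here; each primed node would reuse the conditional probability of its unprimed counterpart. Intervening on shooter 2 leaves shooter 1 without a primed copy, whereas intervening on the order creates primed copies of both shooters and of the prisoner's death.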

Example 11.13.

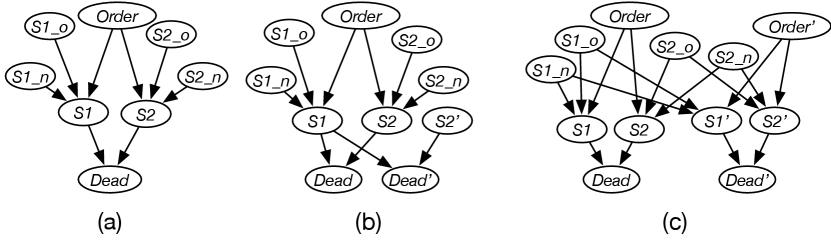

Figure 11.11(a) shows a model of a firing squad as in Example 11.12. The captain can give an order to two shooters who can shoot to kill; when one of them shoots, the prisoner dies. $Order$ is true when the order is given to shoot. $S1$ is true when shooter 1 shoots to kill. $S1o$ is true when shooter 1 would follow the order to shoot and $S1n$ is true when shooter 1 would shoot when the order is not to shoot. Thus, shooter 1 shoots when the order is given and $S1o$ is true or when the order is not given and $S1n$ is true:

$$s1 \leftrightarrow (order \wedge s1o) \vee (\neg order \wedge s1n)$$

where $order$ means $Order = true$, and similarly for other variables. The model for the second shooter is similar.
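The rule for shooter 1 is deterministic; all of the randomness sits in the two noise inputs. The sketch below writes the rule as a Python function and recovers the conditional probability of shooting given the order by summing over the noise. The function names and the two probabilities are assumptions for illustration, not values from the text.

```python
from itertools import product

def shooter1(order, follows_order, shoots_unordered):
    """Shooter 1 shoots when ordered and compliant, or when not ordered and rogue."""
    return (order and follows_order) or (not order and shoots_unordered)

# Assumed probabilities of the two noise inputs being true.
P_FOLLOWS = 0.9
P_UNORDERED = 0.05

def prob_shoots_given(order):
    """P(shooter 1 shoots | order), obtained by summing out the noise inputs."""
    total = 0.0
    for follows, unordered in product([True, False], repeat=2):
        weight = (P_FOLLOWS if follows else 1 - P_FOLLOWS) * \
                 (P_UNORDERED if unordered else 1 - P_UNORDERED)
        if shooter1(order, follows, unordered):
            total += weight
    return total

print(round(prob_shoots_given(True), 3))   # 0.9
print(round(prob_shoots_given(False), 3))  # 0.05
```

Each noise input determines the behavior in exactly one of the two cases, which is why the conditional probabilities equal the noise probabilities.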

Figure 11.11(b) shows the network used when considering the alternative “what if shooter 2 did shoot?” or “what if shooter 2 did not shoot?” In this case there is a new variable $S2'$ that is true when shooter 2 shot in the alternative scenario. Everything in the alternative scenario is the same as in the initial scenario, except that the consequences of shooter 2 shooting might be different. In this case, the variable $Dead'$ represents the proposition that the prisoner is dead in the alternative scenario.

The counterfactual “the second shooter shot; what is the probability that the prisoner would be dead if the second shooter did not shoot?” can be computed by querying

$$P(dead' \mid s2 \wedge \neg s2')$$

in the network of Figure 11.11(b).

The counterfactual “the prisoner is dead; what is the probability that the prisoner would be dead if the second shooter did not shoot?” can be computed by querying

$$P(dead' \mid dead \wedge \neg s2')$$

in the network of Figure 11.11(b).

Figure 11.11(c) shows the counterfactual network for “what if the order was not given”. The counterfactual “shooter 1 didn’t shoot and the prisoner was dead; what is the probability the prisoner is dead if the order was not given?” can be answered with the query

$$P(dead' \mid \neg s1 \wedge dead \wedge \neg order')$$

in the network of Figure 11.11(c).
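The three counterfactual queries of this example can be checked by brute-force enumeration over the noise variables, evaluating the original and the primed variables side by side. The text gives no numbers, so every probability below is an assumption, and `twin_world` and `posterior_dead_primed` are hypothetical helper names rather than part of any library.

```python
from itertools import product

# Assumed probabilities that each parentless variable is true (not from the text).
PRIOR = {"order": 0.5, "s1o": 0.9, "s1n": 0.05, "s2o": 0.9, "s2n": 0.05}

def shoots(order, follows_order, shoots_unordered):
    return (order and follows_order) or (not order and shoots_unordered)

def twin_world(n, primed_root, value):
    """Original and primed variable values for one noise setting n."""
    w = {"order": n["order"]}
    w["s1"] = shoots(n["order"], n["s1o"], n["s1n"])
    w["s2"] = shoots(n["order"], n["s2o"], n["s2n"])
    w["dead"] = w["s1"] or w["s2"]
    # Conditioning on the parentless primed node simply fixes its value.
    order_p = value if primed_root == "order" else n["order"]
    s1_p = shoots(order_p, n["s1o"], n["s1n"])
    s2_p = value if primed_root == "s2" else shoots(order_p, n["s2o"], n["s2n"])
    w["dead'"] = s1_p or s2_p
    return w

def posterior_dead_primed(evidence, primed_root, value):
    """P(dead' | evidence on unprimed variables, primed_root' = value)."""
    num = den = 0.0
    for bits in product([True, False], repeat=len(PRIOR)):
        n = dict(zip(PRIOR, bits))
        weight = 1.0
        for name, b in n.items():
            weight *= PRIOR[name] if b else 1 - PRIOR[name]
        w = twin_world(n, primed_root, value)
        if all(w[k] == v for k, v in evidence.items()):
            den += weight
            if w["dead'"]:
                num += weight
    return num / den

q1 = posterior_dead_primed({"s2": True}, "s2", False)
q2 = posterior_dead_primed({"dead": True}, "s2", False)
q3 = posterior_dead_primed({"s1": False, "dead": True}, "order", False)
print(round(q1, 3), round(q2, 3), round(q3, 3))  # 0.855 0.874 0.409
```

Under these assumed numbers, the prisoner would probably still be dead had shooter 2 not shot (the first two queries). In the third query, knowing that shooter 1 did not shoot while the prisoner died implies that shooter 2 shot, which raises the probability that shooter 2 shoots without an order.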