Artificial Intelligence 3E: foundations of computational agents

2.1 Agents and Environments

An agent is something that acts in an environment. An agent can, for example, be a person, a robot, a dog, a worm, a lamp, a computer program that buys and sells, or a corporation.

Agents receive stimuli from their environment (Figure 1.4). Stimuli include light, sound, words typed on a keyboard, mouse movements, and physical bumps. The stimuli can also include information obtained from a webpage or from a database. Agents carry out actions that can affect the environment. Actions include steering, accelerating wheels, moving links of arms, speaking, displaying information, or sending a post command to a website.

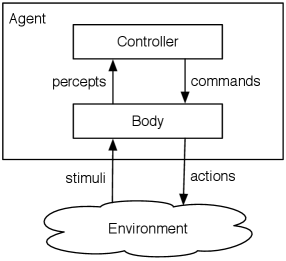

An agent is made up of a body and a controller. Agents interact with the environment through a body. The controller receives percepts from the body and sends commands to the body. See Figure 2.1.

A body includes:

- sensors that convert stimuli into percepts
- actuators, also called effectors, that convert commands into actions in the environment.

A body can also carry out actions that don’t go through the controller, such as a robot's stop button or human reflexes.

An embodied agent has a physical body. A robot is an artificial purposive embodied agent. Sometimes agents that act only in an information space are called robots or bots.

Common sensors for robots include touch sensors, cameras, infrared sensors, sonar, microphones, keyboards, mice, and XML readers used to extract information from webpages. As a prototypical sensor, a camera senses light coming into its lens and converts it into a two-dimensional array of intensity values called pixels. Sometimes multiple pixel arrays represent different colors or multiple cameras. Such pixel arrays could be the percepts for our controller. More often, percepts consist of higher-level features such as lines, edges, and depth information. Often the percepts are more specialized – for example, the positions of bright orange dots, the part of the display a student is looking at, or the hand signals given by a human. Sensors can be noisy, unreliable, or broken. Even when sensors are reliable there still may be ambiguity about the world given the sensor readings.

Commands include low-level commands such as to set the voltage of a motor to some particular value, and high-level specifications of the desired motion of a robot such as “stop” or “travel at 1 meter per second due east” or “go to room 103.” Actuators, like sensors, are typically noisy and often slow and unreliable. For example, stopping takes time; a robot, governed by the laws of physics, has momentum, and messages take time to travel. The robot may end up going only approximately 1 meter per second, approximately east, and both speed and direction may fluctuate. Traveling to a particular room may fail for a number of reasons.

2.1.1 Controllers

Agents are situated in time; they receive sensory data in time and do actions in time.

Let $T$ be the set of time points. Assume that $T$ is totally ordered. $T$ is discrete time if there are only a finite number of time points between any two time points; for example, there is a time point every hundredth of a second, or every day, or there may be time points whenever interesting events occur. Discrete time has the property that, for all times, except perhaps a last time, there is always a next time. Initially, assume that time is discrete and goes on forever. Write $t+1$ for the next time after time $t$. The time points do not need to be equally spaced. Assume that $T$ has a starting point, which is defined to be $0$.

Suppose $P$ is the set of all possible percepts. A percept trace, or percept stream, is a function from $T$ into $P$. It specifies which percept is received at each time.

Suppose $C$ is the set of all commands. A command trace is a function from $T$ into $C$. It specifies the command for each time point.
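As a minimal sketch, assuming time points are the integers 0, 1, 2, … and borrowing the trading setting of Example 2.1 below, a percept trace and a command trace can be written as ordinary Python functions from time points to percepts and to commands. The names `Percept`, `percept_trace`, and `command_trace` and all of the numbers are hypothetical, chosen only for illustration.

```python
from typing import Callable, NamedTuple

class Percept(NamedTuple):
    """An element of P: what the agent senses at one time point."""
    price: float  # price per roll at this time
    stock: int    # rolls the household has in stock at this time

# Traces are functions from time points (non-negative ints here) into P or C.
PerceptTrace = Callable[[int], Percept]  # T -> P
CommandTrace = Callable[[int], int]      # T -> C: number of rolls to order

def percept_trace(t: int) -> Percept:
    """Hypothetical trace: the price cycles weekly; the household uses
    2 rolls a day and is restocked with 12 rolls every sixth day."""
    price = 2.0 + 0.1 * (t % 7)
    stock = 24 - 2 * (t % 6)
    return Percept(price, stock)

def command_trace(t: int) -> int:
    """Hypothetical trace: order 12 rolls every sixth day, otherwise 0."""
    return 12 if t % 6 == 5 else 0
```

Because a trace covers all future times, it is given as a function rather than a stored list; an agent could only ever observe a finite prefix such as `[percept_trace(t) for t in range(7)]`.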

Example 2.1.

Consider a household trading agent that monitors the price of some commodity, such as toilet paper, by checking for deals online, as well as how much the household has in stock. It must decide whether to order more and how much to order. Assume the percepts are the price and the amount in stock. The command is the number of units the agent decides to order (which is zero if the agent does not order any). A percept trace specifies for each time point (e.g., each day) the price at that time and the amount in stock at that time. A percept trace is given in Figure 2.2; at each time there is both a price and an amount in stock, here given as two graphs. A command trace specifies how much the agent decides to order at each time point. An example command trace is given in Figure 2.3.

The action of actually buying depends on the command but may be different. For example, the agent could issue a command to buy 12 rolls of paper at $2.10. This does not mean that the agent actually buys 12 rolls because there could be communication problems, the store could have run out of paper, or the price could change between deciding to buy and actually buying.
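A small sketch of how the action can differ from the command, under a hypothetical model of the store: the purchase fails if the price has risen above the price in the command, and otherwise it is capped by the store's remaining stock. The function `execute_order` and its parameters are assumptions for illustration; communication failures are not modeled.

```python
def execute_order(units_ordered: int, max_price: float,
                  store_stock: int, current_price: float) -> int:
    """Return the number of units actually bought (the action) for a command.

    Hypothetical model: nothing is bought if the price rose above the price
    the agent commanded; otherwise the purchase is capped by store stock.
    """
    if current_price > max_price:
        return 0  # the price changed between deciding and buying
    return min(units_ordered, store_stock)  # the store may have run short
```

For instance, `execute_order(12, 2.10, 5, 2.10)` buys only 5 units even though the command asked for 12.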

A percept trace for an agent is thus the sequence of all past, present, and future percepts received by the controller. A command trace is the sequence of all past, present, and future commands issued by the controller.

Because all agents are situated in time, an agent cannot actually observe full percept traces; at any time it has only experienced the part of the trace up to now. At time $t$, an agent can only observe the value of the command and percept traces up to time $t$, and its commands cannot depend on percepts after time $t$.

The history of an agent at time $t$ is the agent’s percept trace for all times before or at time $t$ and its command trace before time $t$.

A transduction is a function from the history of an agent at time $t$ to the command at time $t$.

Thus a transduction is a function from percept traces to command traces that is causal in that the command at time $t$ depends only on percepts up to and including time $t$.
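A minimal sketch, assuming discrete time points 0, 1, 2, …: the history at time t is the percepts at times 0 to t together with the commands at times 0 to t-1, and a transduction is any function from such a history to the command at t. Running it forward over a finite prefix of a percept trace yields a command trace that is causal by construction, since later percepts are never passed in. The names `run_transduction` and `new_max` are hypothetical.

```python
from typing import Callable, List, Sequence, TypeVar

P = TypeVar("P")  # percept type
C = TypeVar("C")  # command type

def run_transduction(transduction: Callable[[Sequence[P], Sequence[C]], C],
                     percepts: Sequence[P]) -> List[C]:
    """Compute the command trace for a finite prefix of a percept trace.

    At time t the transduction sees only percepts[0..t] and the commands
    issued before t, so no command can depend on a future percept.
    """
    commands: List[C] = []
    for t in range(len(percepts)):
        commands.append(transduction(percepts[: t + 1], commands[:]))
    return commands

def new_max(percepts: Sequence[int], commands: Sequence[int]) -> int:
    """Toy transduction: command 1 when the current percept beats all earlier ones."""
    return 1 if all(percepts[-1] > p for p in percepts[:-1]) else 0
```

Here `run_transduction(new_max, [3, 1, 4, 1, 5])` gives `[1, 0, 1, 0, 1]`, and changing the last two percepts cannot change the first three commands.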

A controller is an implementation of a transduction.

Note that this allows for the case where the agent can observe and act at the same time. This is useful when the time granularity is long enough. When time is measured finely enough, an agent may take time to react to percepts, in which case the command at time $t$ can be a function of only the history before time $t$.

Example 2.2.

Continuing Example 2.1, a transduction specifies, for each time, how much of the commodity the agent should buy depending on the price history, the history of how much of the commodity is in stock (including the current price and amount in stock), and the past history of buying.

An example of a transduction is as follows: buy four dozen rolls if there are fewer than five dozen in stock and the price is less than 90% of the average price over the last 20 days; buy a dozen rolls if there are fewer than a dozen in stock; otherwise, do not buy any.
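The transduction above can be sketched as a function of the history. This sketch assumes one time point per day, counts in rolls, and takes "the average price over the last 20 days" to mean the prices on the up-to-20 days before today (the example does not say whether today's price is included). On the first day, with no earlier prices, it uses today's price as the average; that is also an assumption. The name `trading_transduction` is hypothetical.

```python
from typing import Sequence, Tuple

DOZEN = 12

def trading_transduction(percepts: Sequence[Tuple[float, int]],
                         commands: Sequence[int]) -> int:
    """Rolls to order now, given the history.

    percepts[t] = (price, stock) for times 0..now; commands holds past orders,
    which this particular transduction happens not to use.
    """
    price, stock = percepts[-1]
    past_prices = [p for p, _ in percepts[-21:-1]]  # up to 20 days before today
    if past_prices:
        average = sum(past_prices) / len(past_prices)
    else:
        average = price  # assumption for day 0: no discount is detectable
    if stock < 5 * DOZEN and price < 0.9 * average:
        return 4 * DOZEN  # cheap and not overstocked: buy four dozen
    if stock < DOZEN:
        return DOZEN      # running low: buy a dozen
    return 0
```

The rules are checked in the order the example lists them, so a low price with low stock yields four dozen rather than one.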

2.1.2 Belief States

Although a transduction is a function of an agent’s history, it cannot be directly implemented because an agent does not have direct access to its entire history. It has access only to its current percepts and what it has remembered.

The memory or belief state of an agent at time $t$ is all the information the agent has remembered from the previous times. An agent has access only to the part of the history that it has encoded in its belief state. Thus, the belief state encapsulates all of the information about its history that the agent can use for current and future commands. At any time, an agent has access to its belief state and its current percepts.

The belief state can contain any information, subject to the agent’s memory and processing limitations. This is a very general notion of belief.

Some instances of belief state include the following:

- The belief state for an agent that is following a fixed sequence of instructions may be a program counter that records its current position in the sequence, or a list of actions still to carry out.

- The belief state can contain specific facts that are useful – for example, where the delivery robot left a parcel when it went to find a key, or where it has already checked for the key. It may be useful for the agent to remember any information that it might need for the future that is reasonably stable and that cannot be immediately observed.

- The belief state could encode a model or a partial model of the state of the world. An agent could maintain its best guess about the current state of the world or could have a probability distribution over possible world states; see Section 9.6.2.

- The belief state could contain an estimate of how good each action is for each world state. This belief state, called the $Q$-function, is used extensively in decision-theoretic planning and reinforcement learning.

- The belief state could be a representation of the dynamics of the world – how the world changes – and some of its recent percepts. Given its percepts, the agent could reason about what is true in the world.

- The belief state could encode what the agent desires, the goals it still has to achieve, its beliefs about the state of the world, and its intentions, or the steps it intends to take to achieve its goals. These can be maintained as the agent acts and observes the world, for example, removing achieved goals and replacing intentions when more appropriate steps are found.
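As a small sketch of the first kind of belief state listed, an agent following a fixed sequence of instructions needs to remember only a program counter. Here the belief state is an integer index into a hypothetical `PLAN`, and a hypothetical Boolean percept `done` reports whether the current step has finished; the function name `step` is also an assumption.

```python
from typing import List, Tuple

# Hypothetical fixed sequence of instructions.
PLAN: List[str] = ["go_to_door", "open_door", "go_to_room_103", "stop"]

def step(pc: int, done: bool) -> Tuple[int, str]:
    """Given belief state pc and percept done, return (next belief state, command)."""
    if done and pc < len(PLAN) - 1:
        pc += 1  # the current step finished: advance to the next instruction
    return pc, PLAN[pc]
```

The whole history of percepts is compressed into one integer, which is all this agent needs for its future commands.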

2.1.3 Agent Functions

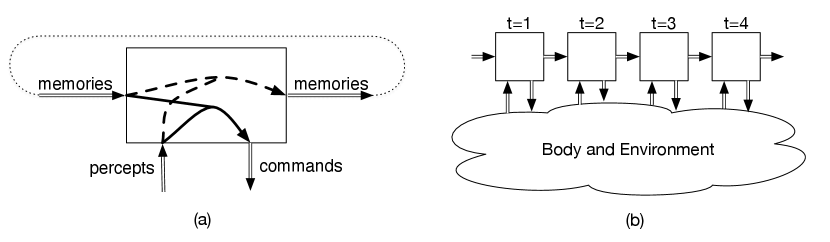

A controller maintains the agent’s belief state and determines what command to issue at each time. The information it has available when it must do this is its belief state and its current percepts. Figure 2.4 shows how the agent function acts in time; the memories output at one time are the memories input at the next time.

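A belief state transition function for discrete time is a function

$$\mathit{remember}: S \times P \to S$$

where $S$ is the set of belief states and $P$ is the set of possible percepts; $s_{t+1} = \mathit{remember}(s_t, p_t)$ means that $s_{t+1}$ is the belief state following belief state $s_t$ when $p_t$ is observed.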

A command function is a function

$$\mathit{command}: S \times P \to C$$

where $S$ is the set of belief states, $P$ is the set of possible percepts, and $C$ is the set of possible commands; $c_t = \mathit{command}(s_t, p_t)$ means that the controller issues command $c_t$ when the belief state is $s_t$ and when $p_t$ is observed.

The belief-state transition function and the command function together specify a controller for the agent. Note that a transduction is a function of the agent’s history, which the agent does not necessarily have access to, but a command function is a function of the agent’s belief state and percepts, which it does have access to.
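The two functions can be combined into a controller loop in the spirit of Figure 2.4. In this minimal sketch, `remember` and `command` are ordinary Python functions passed in as arguments, a finite list stands in for the percept stream, and the belief state output at each step is the input at the next. The name `run_controller` is hypothetical.

```python
from typing import Callable, Iterable, List, TypeVar

S = TypeVar("S")  # belief states
P = TypeVar("P")  # percepts
C = TypeVar("C")  # commands

def run_controller(remember: Callable[[S, P], S],
                   command: Callable[[S, P], C],
                   initial_belief: S,
                   percepts: Iterable[P]) -> List[C]:
    """Issue one command per percept, threading the belief state through time."""
    belief = initial_belief
    commands: List[C] = []
    for percept in percepts:
        commands.append(command(belief, percept))  # c_t = command(s_t, p_t)
        belief = remember(belief, percept)         # s_{t+1} = remember(s_t, p_t)
    return commands
```

For example, with the belief state being the largest percept seen so far, `run_controller(lambda s, p: max(s, p), lambda s, p: 1 if p > s else 0, float("-inf"), [3, 1, 4, 1, 5])` returns `[1, 0, 1, 0, 1]`: a single remembered number suffices to implement a transduction that, on paper, depends on the whole history.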

Example 2.3.

To implement the transduction of Example 2.2, a controller can keep track of the rolling history of the prices for the previous 20 days and their average, using the variable $\mathit{ave}$, updated using

$$\mathit{ave} := \mathit{ave} + \frac{\mathit{new} - \mathit{old}}{20}$$

where $\mathit{new}$ is the new price and $\mathit{old}$ is the oldest price remembered. It can then discard $\mathit{old}$. It must do something special for the first 20 days. See Section A.1 for an analysis of rolling averages.
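A minimal sketch of this rolling-history belief state, assuming the window is held in a `collections.deque`. For the first 20 days this sketch averages whatever prices it has seen so far (an incremental mean); that is one possible choice for the special case, not the only one. The class name `RollingAverage` is hypothetical.

```python
from collections import deque
from typing import Deque

class RollingAverage:
    """Belief state: the last `window` prices and their average."""

    def __init__(self, window: int = 20) -> None:
        self.window = window
        self.prices: Deque[float] = deque()
        self.ave = 0.0

    def update(self, new: float) -> float:
        """Add the new price, discarding the oldest once the window is full."""
        if len(self.prices) < self.window:
            # Warm-up: incremental mean of the prices seen so far.
            self.prices.append(new)
            self.ave += (new - self.ave) / len(self.prices)
        else:
            old = self.prices.popleft()            # oldest remembered price
            self.prices.append(new)
            self.ave += (new - old) / self.window  # ave := ave + (new - old)/20
        return self.ave
```

Because each update adds and subtracts floating-point values, rounding error can accumulate over very long runs; recomputing `sum(self.prices) / self.window` occasionally is a simple safeguard.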

A simpler controller could, instead of remembering a rolling history in order to maintain the average, remember just a rolling estimate of the average and use that value as a surrogate for the oldest item. The belief state can then contain one real number ($\mathit{ave}$), with the state transition function

$$\mathit{ave} := \mathit{ave} + \frac{\mathit{new} - \mathit{ave}}{20}$$

This controller is much easier to implement and is not as sensitive to what happened exactly 20 time units ago. It does not actually compute the average, as it is biased towards recent data. This way of maintaining estimates of averages is the basis for temporal differences in reinforcement learning.
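A minimal sketch of the single-number belief state; `update_average` is a hypothetical name, and `n = 20` matches the 20-day window of the example.

```python
def update_average(ave: float, new: float, n: int = 20) -> float:
    """State transition ave := ave + (new - ave) / n; the belief state is one number."""
    return ave + (new - ave) / n

# If the price jumps from 2.0 to a steady 3.0, the estimate closes
# 1/n of the remaining gap at each time step.
ave = 2.0
for _ in range(20):
    ave = update_average(ave, 3.0)
```

After 20 steps the gap to 3.0 has shrunk by a factor of (19/20)^20, so `ave` is about 2.64 rather than 3.0. Unrolling the update shows that a price k steps in the past carries weight (1/20)(19/20)^k, which is why the estimate is biased towards recent data instead of being a true 20-day average.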

If there are a finite number of possible belief states, the controller is called a finite state controller or a finite state machine. A factored representation is one in which the belief states, percepts, or commands are defined by features. If there are a finite number of features, and each feature can only have a finite number of possible values, then the controller is a factored finite state machine. Richer controllers can be built using an unbounded number of values or an unbounded number of features. A controller that has an unbounded but countable number of states can compute anything that is computable by a Turing machine.
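As a sketch of a factored finite state machine, suppose, hypothetically, that the trading agent reduces what it senses to two Boolean percept features, `cheap` and `low_stock`, and keeps one Boolean belief-state feature recording whether it ordered at the previous time point. With finitely many belief states, percepts, and commands, the whole controller can be tabulated. The feature names, order sizes, and rules are assumptions for illustration.

```python
from itertools import product
from typing import Dict, Tuple

Percept = Tuple[bool, bool]  # features: (cheap, low_stock)
Belief = bool                # feature: ordered at the previous time point

def command(ordered: Belief, percept: Percept) -> int:
    """Rolls to order, as a function of belief state and percept."""
    cheap, low_stock = percept
    if ordered:
        return 0   # wait one step for the previous order to arrive
    if low_stock and cheap:
        return 48
    if low_stock:
        return 12
    return 0

def remember(ordered: Belief, percept: Percept) -> Belief:
    """Next belief state: whether an order is being placed now."""
    return command(ordered, percept) > 0

# Every feature has finitely many values, so the controller is a finite table
# mapping (belief state, percept) to (command, next belief state).
TABLE: Dict[Tuple[Belief, Percept], Tuple[int, Belief]] = {
    (s, p): (command(s, p), remember(s, p))
    for s in (False, True)
    for p in product((False, True), repeat=2)
}
```

The table has 2 × 4 = 8 rows; adding features multiplies the number of rows, which is one reason factored representations describe such machines by rules over features rather than by listing every state.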