Third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including the full text).

8.3 Regression Planning

It is often more efficient to search in a different search space - one where the nodes are not states but rather are goals to be achieved. Once the problem has been transformed into a search problem, any of the algorithms of Chapter 3 can be used. We will only consider achievement goals and not maintenance goals; see Exercise 8.9.

Regression planning is searching in the graph defined by the following:

- The nodes are goals that must be achieved. A goal is a set of assignments to (some of) the features.

- The arcs correspond to actions. In particular, an arc from node g to g', labeled with action act, means act is the last action that is carried out before goal g is achieved, and the node g' is the goal that must be true immediately before act so that g is true immediately after act.

- The start node is the goal to be achieved. Here we assume it is a conjunction of assignments of values to features.

- The goal condition for the search, goal(g), is true if all of the elements of g are true of the initial state.

Given a node that represents goal g, a neighbor of g exists for every action act such that

- act is possible: it is possible for act to be carried out and for g to be true immediately after act; and

- act is useful: act achieves part of g.

The neighbor of g along the arc labeled with action act is the node g' defined by the weakest precondition. The weakest precondition for goal g to hold after action act is a goal g' such that

- g' is true before act implies that g is true immediately after act.

- g' is "weakest" in the sense that any proposition that satisfies the first condition must imply g'. This precludes, for example, having unnecessary conditions conjoined onto a precondition.

A set of assignments of values to variables is consistent if it assigns at most one value to any variable. That is, it is inconsistent if it assigns two different values to any variable.

Suppose goal g={X1=v1,...,Xn=vn} is the node being considered.

Consider computing the neighbors of a node given the feature-based representation of actions. An action act is useful if there is a causal rule that achieves Xi=vi for some i, using action act. The neighbor of this node along the arc labeled with action act is the proposition

precondition(act)∧body(X1=v1,act)∧...∧body(Xn=vn,act)

where body(Xi=vi,act) is the set of assignments of variables in the body of a rule that specifies when Xi=vi is true immediately after act. There is no such neighbor if there is no corresponding rule for some i, or if the proposition is inconsistent (i.e., assigns different values to a variable). Note that, if multiple rules are applicable for the same action, there will be multiple neighbors.

In terms of the STRIPS representation, act is useful for solving g if Xi=vi is an effect of action act, for some i. Action act is possible unless there is an effect Xj=vj of act and g contains Xj=vj' where vj' ≠vj. Immediately before act, the preconditions of act, as well as any Xk=vk not achieved by act, must hold. Thus, the neighbor of the goal g on an arc labeled with act is

precondition(act) ∪(g \ effects(act))}

as long as it is consistent.

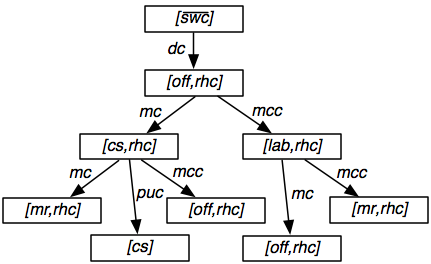

⟨[ ¬swc],[off,rhc]⟩ labeled with dc.

Consider the node [off,rhc]. There are two actions that can achieve off, namely mc from cs and mcc from lab. There is one action that can achieve rhc, namely puc. However, puc has as a precondition cs∧ ¬rhc, but cs and off are inconsistent (because they involve different assignments to the variable RLoc). Thus, puc is not a possible last action; it is not possible that, immediately after puc, the condition [off,rhc] holds.

Figure 8.3 shows the first two levels of the search space (without multipath pruning or loop detection). Note that the search space is the same no matter what the initial state is. The starting state has two roles, first as a stopping criterion and second as a source of heuristics.

The following example shows how a regression planner can recognize what the last action of a plan must be.

A problem with the regression planner is that a goal may not be achievable. Deciding whether a set of goals is achievable is often difficult to infer from the definitions of the actions. For example, you may be required to know that an object cannot be at two different places at the same time; sometimes this is not explicitly represented and is only implicit in the effects of an action, and the fact that the object is only in one position initially. To perform consistency pruning, the regression planner can use domain knowledge to prune the search space.

Loop detection and multiple-path pruning may be incorporated into a regression planner. The regression planner does not have to visit exactly the same node to prune the search. If the goal represented by a node n implies a goal on the path to n, node n can be pruned. Similarly, for multiple-path pruning, see Exercise 8.11.

A regression planner commits to a particular total ordering of actions, even if no particular reason exists for one ordering over another. This commitment to a total ordering tends to increase the complexity of the search space if the actions do not interact much. For example, it tests each permutation of a sequence of actions when it may be possible to show that no ordering succeeds.