Third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including the full text).

8.2 Forward Planning

A deterministic plan is a sequence of actions to achieve a goal from a given starting state. A deterministic planner is a problem solver that can produce a plan. The input to a planner is an initial world description, a specification of the actions available to the agent, and a goal description. The specification of the actions includes their preconditions and their effects.

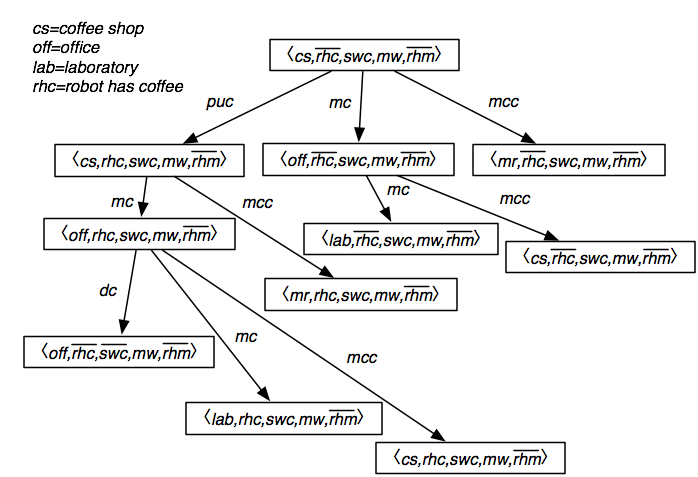

One of the simplest planning strategies is to treat the planning problem as a path planning problem in the state-space graph. In a state-space graph, nodes are states, and arcs correspond to actions from one state to another. The arcs coming out of a state s correspond to all of the legal actions that can be carried out in that state. That is, for each state s, there is an arc for each action a whose precondition holds in state s, and where the resulting state does not violate a maintenance goal. A plan is a path from the initial state to a state that satisfies the achievement goal.

A forward planner searches the state-space graph from the initial state looking for a state that satisfies a goal description. It can use any of the search strategies described in Chapter 3.

Using a forward planner is not the same as making an explicit state-based representation of the actions, because the relevant part of the graph can be created dynamically from the representations of the actions.

A complete search strategy, such as A* with multiple-path pruning or iterative deepening, is guaranteed to find a solution. The complexity of the search space is defined by the forward branching factor of the graph. The branching factor is the set of all possible actions at any state, which may be quite large. For the simple robot delivery domain, the branching factor is 3 for the initial situation and is up to 4 for other situations. When the domain becomes bigger, the branching factor increases and so the search space explodes. This complexity may be reduced by finding good heuristics [see Exercise 8.6], but the heuristics have to be very good to overcome the combinatorial explosion.

A state can be represented as either

- a complete world description, in terms of an assignment of a value to each primitive proposition or as a proposition that defines the state, or

- a path from an initial state; that is, by the sequence of actions that were used to reach that state from the initial state. In this case, what holds in a state can be deduced from the axioms that specify the effects of actions.

The difference between representations (a) and (b) amounts to the difference between computing a whole new world description for each world created, or by calculating what holds in a world as necessary. Alternative (b) may save on space (particularly if there is a complex world description) and will allow faster creation of a new node, but it will be slower to determine what actually holds in any given world. Another difficulty with option (b) is that determining whether two states are the same (e.g., for loop detection or multiple-path pruning) is expensive.

We have presented state-space searching as a forward search method, but it is also possible to search backward from the set of states that satisfy the goal. Whereas the initial state is usually fully specified and so the frontier starts off containing a single state, the goal does not usually fully specify a state and so there would be many goal states that satisfy the goal. This would mean that the frontier is initially very large. Thus, backward search in the state space is often not practical.