Third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including the full text).

2.2.1 The Agent Function

Agents are situated in time: they receive sensory data in time and do actions in time. The action that an agent does at a particular time is a function of its inputs. We first consider the notion of time.

Let T be the set of time points. Assume that T is totally ordered and has some metric that can be used to measure the temporal distance between any two time points. Basically, we assume that T can be mapped to some subset of the real line.

T is discrete if there are only finitely many time points between any two time points; for example, there is a time point every hundredth of a second, or every day, or there may be time points whenever interesting events occur. T is dense if there is always another time point between any two time points; this implies there must be infinitely many time points between any two points. Discrete time has the property that, for all times, except perhaps a last time, there is always a next time. Dense time does not have a "next time." Initially, we assume that time is discrete and goes on forever. Thus, for each time there is a next time. We write t+1 for the next time after time t; this notation does not imply that the time points are equally spaced. Assume that T has a starting point, which we arbitrarily call 0.

Suppose P is the set of all possible percepts. A percept trace, or percept stream, is a function from T into P. It specifies what is observed at each time.

Suppose C is the set of all commands. A command trace is a function from T into C. It specifies the command for each time point.

Consider, for example, a trading agent whose percepts are the price of toilet paper and the amount in stock, and whose command is the number of rolls to buy. The action of actually buying depends on the command but may differ from it. For example, the agent could issue a command to buy 12 rolls of toilet paper at a particular price. This does not mean that the agent actually buys 12 rolls, because there could be communication problems, the store could have run out of toilet paper, or the price could change between deciding to buy and actually buying.

A percept trace for an agent is thus the sequence of all past, present, and future percepts received by the controller. A command trace is the sequence of all past, present, and future commands issued by the controller. The commands can be a function of the history of percepts. This gives rise to the concept of a transduction, a function that maps percept traces into command traces.

Because all agents are situated in time, an agent cannot actually observe full percept traces; at any time it has only experienced the part of the trace up to now. It can only observe the value of the trace at time t∈T when it gets to time t. Its command can only depend on what it has experienced.

A transduction is causal if, for all times t, the command at time t depends only on percepts up to and including time t. The causality restriction is needed because agents are situated in time; their command at time t cannot depend on percepts after time t. A controller is an implementation of a causal transduction.
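To make causality concrete, here is a sketch that assumes a finite horizon of discrete times 0, 1, ..., n-1 and represents traces as Python lists, so that a transduction is a function from a whole percept trace to a whole command trace. The check only tests one pair of traces, so it can refute causality but cannot prove it; `running_max`, `peek_ahead`, and `agrees_on_shared_prefix` are hypothetical names used for illustration.

```python
from typing import Callable, List, Sequence

# A transduction maps a whole (finite) percept trace to a command trace of
# the same length: commands[t] is the command issued at time t.
Transduction = Callable[[Sequence[int]], List[int]]


def running_max(percepts: Sequence[int]) -> List[int]:
    """Causal: the command at time t uses only percepts[0..t]."""
    return [max(percepts[: t + 1]) for t in range(len(percepts))]


def peek_ahead(percepts: Sequence[int]) -> List[int]:
    """Not causal: the command at time t uses the percept at time t+1."""
    n = len(percepts)
    return [percepts[t + 1] if t + 1 < n else percepts[t] for t in range(n)]


def agrees_on_shared_prefix(f: Transduction, a: Sequence[int], b: Sequence[int]) -> bool:
    """Necessary condition for causality, tested on one pair of traces:
    whenever a and b agree on percepts 0..t, f must issue the same command at t."""
    fa, fb = f(a), f(b)
    for t in range(min(len(a), len(b))):
        if list(a[: t + 1]) == list(b[: t + 1]) and fa[t] != fb[t]:
            return False
    return True


a = [3, 1, 4, 1, 5]
b = [3, 1, 4, 9, 2]  # agrees with a up to time 2, differs afterwards
print(agrees_on_shared_prefix(running_max, a, b))  # True
print(agrees_on_shared_prefix(peek_ahead, a, b))   # False
```

The non-causal `peek_ahead` is caught because, at time 2, its command already depends on the percept at time 3, where the two traces differ.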

The history of an agent at time t is the percept trace of the agent for all times before or at time t and the command trace of the agent before time t.

Thus, a causal transduction specifies a function from the agent's history at time t into the command at time t. It can be seen as the most general specification of an agent.

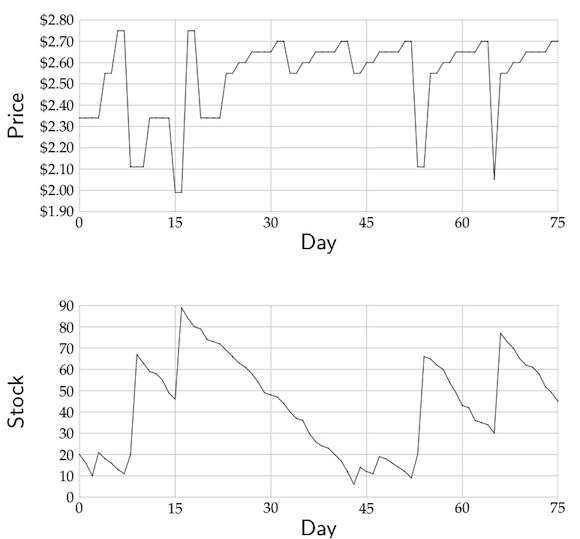

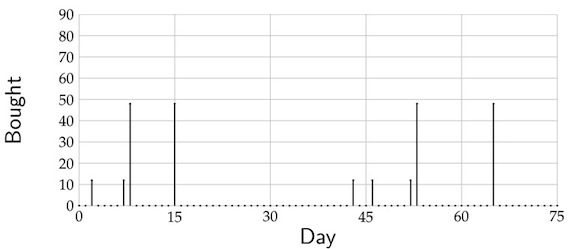

An example of a causal transduction is as follows: buy four dozen rolls if there are fewer than five dozen in stock and the price is less than 90% of the average price over the last 20 days; buy a dozen more rolls if there are fewer than a dozen in stock; otherwise, do not buy any.
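A sketch of this transduction, computed directly from the percept history. It assumes each percept is a (price, rolls in stock) pair and each command is the number of rolls to buy. The text does not say whether "the last 20 days" includes today or what to do before 20 days have passed, so the code assumes the window includes today and averages over whatever prices are available. `buy_command` and `transduce` are hypothetical names.

```python
from typing import List, Sequence, Tuple

# A percept is (price, rolls in stock); a command is the number of rolls to buy.
Percept = Tuple[float, int]


def buy_command(history: Sequence[Percept]) -> int:
    """Command at the current time; history[-1] is the current percept."""
    price, stock = history[-1]
    # Assumption: "the last 20 days" includes today, and before 20 days have
    # passed we average over however many prices have been observed.
    recent = [p for p, _ in history[-20:]]
    average = sum(recent) / len(recent)
    if stock < 60 and price < 0.9 * average:
        return 48  # four dozen
    if stock < 12:
        return 12  # one dozen
    return 0


def transduce(percepts: Sequence[Percept]) -> List[int]:
    """Command trace: the command at time t sees only percepts[0..t]."""
    return [buy_command(percepts[: t + 1]) for t in range(len(percepts))]


trace = [(1.00, 70), (1.00, 50), (0.80, 50), (1.00, 10), (1.00, 30)]
print(transduce(trace))  # [0, 0, 48, 12, 0]
```

Recomputing the command from the entire history at every step is exactly what a real agent cannot do, as the next paragraph explains.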

Although a causal transduction is a function of an agent's history, it cannot be directly implemented because an agent does not have direct access to its entire history. It has access only to its current percepts and what it has remembered.

The belief state of an agent at time t is all of the information the agent has remembered from the previous times. An agent has access only to those parts of its history that it has encoded in its belief state. Thus, the belief state encapsulates all of the information about its history that the agent can use for current and future commands. At any time, an agent has access to its belief state and its percepts.

The belief state can contain any information, subject to the agent's memory and processing limitations. This is a very general notion of belief; sometimes we use a more specific notion of belief, such as the agent's belief about what is true in the world, the agent's beliefs about the dynamics of the environment, or the agent's belief about what it will do in the future.

Some instances of belief state include the following:

- The belief state for an agent that is following a fixed sequence of instructions may be a program counter that records its current position in the sequence.

- The belief state can contain specific facts that are useful, for example, where the delivery robot left the parcel in order to go and get the key, or where it has already checked for the key. It may be useful for the agent to remember anything that is reasonably stable and that cannot be immediately observed.

- The belief state could encode a model or a partial model of the state of the world. An agent could maintain its best guess about the current state of the world or could have a probability distribution over possible world states; see Section 5.6 and Chapter 6.

- The belief state could be a representation of the dynamics of the world and the meaning of its percepts, and the agent could use its perception to determine what is true in the world.

- The belief state could encode what the agent desires, the goals it still has to achieve, its beliefs about the state of the world, and its intentions, or the steps it intends to take to achieve its goals. These can be maintained as the agent acts and observes the world, for example, removing achieved goals and replacing intentions when more appropriate steps are found.

A controller must maintain the agent's belief state and determine what command to issue at each time. The information it has available when it must do this includes its belief state and its current percepts.

A belief state transition function for discrete time is a function

$$\mathit{remember}: S \times P \to S$$

where S is the set of belief states and P is the set of possible percepts; $s_{t+1} = \mathit{remember}(s_t, p_t)$ means that $s_{t+1}$ is the belief state following belief state $s_t$ when $p_t$ is observed.

A command function is a function

$$\mathit{do}: S \times P \to C$$

where S is the set of belief states, P is the set of possible percepts, and C is the set of possible commands; $c_t = \mathit{do}(s_t, p_t)$ means that the controller issues command $c_t$ when the belief state is $s_t$ and when $p_t$ is observed.

The belief-state transition function and the command function together specify a causal transduction for the agent. Note that a causal transduction is a function of the agent's history, which the agent doesn't necessarily have access to, but a command function is a function of the agent's belief state and percepts, which it does have access to.
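A minimal sketch of a controller assembled from these two functions. The names `run_controller`, `remember_last`, and `rose`, and the initial belief state `s0` (which the definitions above do not specify), are hypothetical. At each time it computes the command from the current belief state and percept, then updates the belief state; it never consults the full history.

```python
from typing import Callable, Iterable, List, Optional, TypeVar

S = TypeVar("S")  # belief states
P = TypeVar("P")  # percepts
C = TypeVar("C")  # commands


def run_controller(
    remember: Callable[[S, P], S],
    do: Callable[[S, P], C],
    s0: S,
    percepts: Iterable[P],
) -> List[C]:
    """Produce a command trace from a percept trace, one time step at a time."""
    commands: List[C] = []
    s = s0
    for p in percepts:
        commands.append(do(s, p))  # c_t = do(s_t, p_t)
        s = remember(s, p)         # s_{t+1} = remember(s_t, p_t)
    return commands


# Example: the belief state is the previous percept (None at time 0), and the
# command reports whether the current percept is larger than the previous one.
def remember_last(s: Optional[int], p: int) -> Optional[int]:
    return p


def rose(s: Optional[int], p: int) -> bool:
    return s is not None and p > s


print(run_controller(remember_last, rose, None, [3, 5, 4, 6]))
# [False, True, False, True]
```

Because each command depends only on percepts already received, any controller written this way implements a causal transduction.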

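To implement the toilet-paper buying transduction described earlier, a controller must keep track of the rolling history of prices for the previous 20 days. By also keeping track of the average (ave), it can update the average using

$$\mathit{ave} \leftarrow \mathit{ave} + \frac{\mathit{new} - \mathit{old}}{20}$$

where new is the new price and old is the oldest price remembered. It can then discard old. It must handle the first 20 days specially, before 20 prices have been remembered.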
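A sketch of this rolling-average belief state, assuming the belief state is a pair of (the last 20 prices, their average). The text leaves the first 20 days open; as one possibility, this sketch assumes the agent keeps the mean of whatever prices it has seen so far. `remember_price`, `WINDOW`, and `Belief` are hypothetical names.

```python
from collections import deque
from typing import Deque, Tuple

WINDOW = 20  # number of days in the rolling average

# Belief state: the last (up to) WINDOW prices, plus their average.
Belief = Tuple[Deque[float], float]


def remember_price(belief: Belief, new: float) -> Belief:
    """Belief-state update for the rolling 20-day average."""
    prices, ave = belief
    prices = deque(prices)  # copy, so the previous belief state is unchanged
    if len(prices) < WINDOW:
        # Warm-up (an assumption): keep the mean of the prices seen so far.
        prices.append(new)
        ave = ave + (new - ave) / len(prices)
    else:
        old = prices.popleft()  # the oldest remembered price is discarded
        prices.append(new)
        ave = ave + (new - old) / WINDOW
    return prices, ave


belief: Belief = (deque(), 0.0)
for day in range(1, 26):  # prices 1.0, 2.0, ..., 25.0
    belief = remember_price(belief, float(day))
print(belief[1])  # 15.5, the mean of the last 20 prices (6.0 .. 25.0)
```

Copying the deque makes the function behave like the mathematical remember function, returning a new belief state rather than modifying the old one; an in-place update would be cheaper in practice.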

A simpler controller could, instead of remembering a rolling history in order to maintain the average, remember just the average and use the average as a surrogate for the oldest item. The belief state can then contain one real number (ave). The state transition function to update the average could be

$$\mathit{ave} \leftarrow \mathit{ave} + \frac{\mathit{new} - \mathit{ave}}{20}$$

This controller is much easier to implement and is not sensitive to what happened 20 time units ago. This way of maintaining estimates of averages is the basis for temporal differences in reinforcement learning.
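A sketch of the simpler controller's update, using the hypothetical name `remember_ave`. Unrolling the update shows that it is an exponentially weighted moving average: a price observed k steps ago carries weight (1/20)(19/20)^k, so old prices fade gradually instead of dropping out abruptly after 20 days. The example assumes a price that has been 1.0 for a long time and then jumps to 2.0 and stays there.

```python
def remember_ave(ave: float, new: float, n: int = 20) -> float:
    """Belief state is one number: move it 1/n of the way toward the new price."""
    return ave + (new - ave) / n


# The price has been 1.0 for a long time (so ave is 1.0), then jumps to 2.0.
ave = 1.0
for _ in range(20):
    ave = remember_ave(ave, 2.0)
# Each step shrinks the gap to 2.0 by a factor of 19/20, so after 20 steps
# ave is 2 - 0.95**20 (up to rounding); a 20-day rolling average would be 2.0.
print(round(ave, 4))  # 1.6415
```

The cost of this simplicity, visible in the example, is that after a sustained change in price the estimate lags behind the true 20-day rolling average.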

If there are finitely many possible belief states, the controller is called a finite state controller or a finite state machine. A factored representation is one in which the belief states, percepts, or commands are defined by features. If there are finitely many features, and each feature can only have a finite number of possible values, the controller is a factored finite state machine. Richer controllers can be built using an unbounded number of values or an unbounded number of features. A controller that has countably many states can compute anything that is computable by a Turing machine.
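The program-counter belief state from the list of examples above gives a simple finite state controller. In this sketch, the plan steps in `PLAN` and the Boolean percept `step_done` (whether the current step has been completed) are hypothetical, as are the names `remember`, `do`, and `run`. The belief state ranges over 0..len(PLAN), so there are only finitely many belief states.

```python
from typing import List, Sequence

# Hypothetical fixed plan. The belief state is a program counter in
# 0..len(PLAN), so there are only len(PLAN) + 1 possible belief states.
PLAN = ("go_to_mailroom", "pick_up_parcel", "go_to_office", "drop_parcel")
DONE = len(PLAN)


def remember(pc: int, step_done: bool) -> int:
    """Advance the program counter when the current step is reported done."""
    return min(pc + 1, DONE) if step_done else pc


def do(pc: int, step_done: bool) -> str:
    """Issue the step the program counter will point to, or 'stop' at the end."""
    nxt = remember(pc, step_done)
    return PLAN[nxt] if nxt < DONE else "stop"


def run(percepts: Sequence[bool]) -> List[str]:
    pc, commands = 0, []
    for step_done in percepts:
        commands.append(do(pc, step_done))
        pc = remember(pc, step_done)
    return commands


print(run([False, True, False, True, True, True, False]))
# ['go_to_mailroom', 'pick_up_parcel', 'pick_up_parcel', 'go_to_office',
#  'drop_parcel', 'stop', 'stop']
```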