Artificial

Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

10.1.1 Learning Probabilities

The above formulation of probabilities of models can be used to learn probabilities, as in the following example.

Example 10.1.

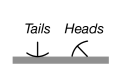

Consider the problem of predicting the next toss of a thumbtack (drawing pin), where we define the outcomes and as follows:

Suppose you tossed a thumbtack a number of times and observed , a particular sequence of instances of and instances of . Assume the tosses are independent, and that occurs with probability . The likelihood is

This is a maximum when the log-likelihood

is a maximum, which occurs when .

Note that if or , this predicts a zero probability for an event that is possible.

The maximum likelihood estimator on the training data is not necessarily the best predictor on the test set.

A simple way both to solve the zero-probability problem and to take prior knowledge into account is to use a real-valued pseudocount or prior count to which the training data are added.

Suppose an agent must predict a value for with domain , and there are no inputs. The agent starts with a pseudocount for each . These counts are chosen before the agent has seen any of the data. Suppose the agent observes some training examples, where is the number of data points with . The probability of is estimated using

This may be used to estimate probabilities even before any data have been seen, when the are all 0.

If there is no prior knowledge, Laplace [1812] suggested that it is reasonable to set . This method with a prior count of 1 is called Laplace smoothing. Laplace smoothing can be justified in terms of averaging over the probabilities [see Section 10.4].

Example 10.2.

In Example 10.1, one possible prior for the model that says that heads occurs with probability is

in which case the probability of the model given examples which consists of a particular sequence of tails and heads is

In this case, the MAP estimate for , the probability of heads is:

One reason for this prior is that you might not think that 0 or 1 is a reasonable estimate for the probability of heads (no matter how much data you have). It also reflects prior knowledge to be used when there are no data, and can be integrated with data. Moreover this prior has the same form as the posterior; both are described in terms of counts (a prior that has the same form as a posterior is called a conjugate prior).

To determine appropriate pseudocounts, consider the question, “How much more should an agent believe if it had seen one example with true than if it had seen no examples with true?” If, with no examples of true, the agent believes that is impossible, should be zero. If not, the ratio chosen in answer to that question should be equal to the ratio . If the pseudocount is 1, a value that has been seen once would be twice as likely as one that has been seen no times. If the pseudocount is 10, a value observed once would be 10% more likely than a value observed no times. If the pseudocount is 0.1, a value observed once would be 11 times more likely than a value observed no times. If there is no reason to choose one value in the domain of over another, all the values of should be equal.

Typically, we do not have data without any prior knowledge. There is often a great deal of knowledge about a domain, either in the meaning of the symbols or in experience with similar examples, that can be used to improve predictions.

Probabilities from Experts

The use of pseudocounts also gives us a way to combine expert opinion and data. Often a single agent does not have good data but may have access to multiple experts who have varying levels of expertise and who give different probabilities.

There are a number of problems with obtaining probabilities from experts:

-

•

experts’ reluctance to give an exact probability value that cannot be refined,

-

•

representing the uncertainty of a probability estimate,

-

•

combining the numbers from multiple experts, and

-

•

combining expert opinion with actual data.

Rather than expecting experts to give probabilities, the experts provide counts. Instead of giving a real number for the probability of , an expert gives a pair of numbers as that is interpreted as though the expert had observed occurrences of out of trials. Essentially, the experts provide not only a probability but also an estimate of the size of the data set on which their opinion is based. Note that you should not necessarily believe an expert’s sample size, as people are often overconfident in their abilities.

The counts from different experts can be combined together by adding the components to give the pseudocounts for the system. Whereas the ratio reflects the probability, different levels of confidence are reflected in the absolute values. Consider different ways to represent the probability . The pair reflects extremely low confidence that would quickly be dominated by data or other experts’ estimates. The pair reflects more confidence – a few examples would not change it much, but tens of examples would. Even hundreds of examples would have little effect on the prior counts of the pair . However, with millions of data points, even these prior counts would have little impact on the resulting probability estimate.