Artificial Intelligence 2E: foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

9.1.1 Axioms for Rationality

An agent chooses actions based on their outcomes. Outcomes are whatever the agent has preferences over. If an agent does not prefer any outcome to any other outcome, it does not matter what the agent does. Initially, we consider outcomes without considering the associated actions. Assume there are only a finite number of outcomes.

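We define a preference relation over outcomes. Suppose \(o_1\) and \(o_2\) are outcomes. We say that \(o_1\) is weakly preferred to outcome \(o_2\), written \(o_1 \succeq o_2\), if outcome \(o_1\) is at least as desirable as outcome \(o_2\).

Define \(o_1 \sim o_2\) to mean \(o_1 \succeq o_2\) and \(o_2 \succeq o_1\). That is, \(o_1 \sim o_2\) means outcomes \(o_1\) and \(o_2\) are equally preferred. In this case, we say that the agent is indifferent between \(o_1\) and \(o_2\).

Define \(o_1 \succ o_2\) to mean \(o_1 \succeq o_2\) and \(o_2 \not\succeq o_1\). That is, the agent weakly prefers outcome \(o_1\) to outcome \(o_2\), but does not weakly prefer \(o_2\) to \(o_1\), and is not indifferent between them. In this case, we say that \(o_1\) is strictly preferred to outcome \(o_2\).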

Typically, an agent does not know the outcome of its actions. A lottery is defined to be a finite distribution over outcomes, written as

\[ [p_1 : o_1,\ p_2 : o_2,\ \dots,\ p_k : o_k] \]

where each \(o_i\) is an outcome and \(p_i\) is a non-negative real number such that

\[ \sum_i p_i = 1 . \]

The lottery specifies that outcome \(o_i\) occurs with probability \(p_i\). In all that follows, assume that outcomes may include lotteries. This includes lotteries where the outcomes are also lotteries, and so on recursively (called lotteries over lotteries).
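As a minimal sketch, a lottery can be held in Python as a list of (probability, outcome) pairs. The names `Lottery` and `is_valid_lottery` are illustrative assumptions, not notation from the text, and this version does not handle lotteries over lotteries.

```python
import math

# A lottery [p1:o1, ..., pk:ok] as a list of (probability, outcome) pairs.
# Outcomes are plain string labels in this sketch.
Lottery = list[tuple[float, str]]

def is_valid_lottery(lottery: Lottery, tol: float = 1e-9) -> bool:
    """Check that probabilities are non-negative and sum to 1 (within tol)."""
    if not lottery:
        return False
    if any(p < 0 for p, _ in lottery):
        return False
    return math.isclose(sum(p for p, _ in lottery), 1.0, abs_tol=tol)

example = [(0.2, "o1"), (0.5, "o2"), (0.3, "o3")]
```

Calling `is_valid_lottery(example)` checks the two conditions in the definition: non-negative probabilities that sum to one.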

Axiom 9.1.

(Completeness) An agent has preferences between all pairs of outcomes:

\[ \forall o_1\, \forall o_2 \quad o_1 \succeq o_2 \ \text{ or } \ o_2 \succeq o_1 . \]

The rationale for this axiom is that an agent must act; if the actions available to it have outcomes \(o_1\) and \(o_2\) then, by acting, it is explicitly or implicitly preferring one outcome over the other.

Axiom 9.2.

(Transitivity) Preferences must be transitive:

\[ \text{if } o_1 \succeq o_2 \text{ and } o_2 \succeq o_3 \text{ then } o_1 \succeq o_3 . \]

To see why this is reasonable, suppose it is false, in which case \(o_1 \succeq o_2\) and \(o_2 \succeq o_3\) and \(o_3 \succ o_1\). Because \(o_3\) is strictly preferred to \(o_1\), the agent should be prepared to pay some amount to get from \(o_1\) to \(o_3\). Suppose the agent has outcome \(o_3\); then \(o_2\) is at least as good, so the agent would just as soon have \(o_2\). Likewise, \(o_1\) is at least as good as \(o_2\), so the agent would just as soon have \(o_1\) as \(o_2\). Once the agent has \(o_1\), it is again prepared to pay to get to \(o_3\). It has gone through a cycle of preferences and paid money to end up where it started. Such a cycle is known as a money pump because, by going through the loop enough times, the amount of money the agent must pay can exceed any finite amount. It seems reasonable to claim that being prepared to pay money to cycle through a set of outcomes is irrational; hence, a rational agent should have transitive preferences.
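The money pump argument can be illustrated with a small simulation. This is only a sketch: the swap tables, the fee, and the function name `money_pump` are assumptions made for illustration. The agent swaps for free whenever the new outcome is at least as good, and pays a fixed fee whenever the new outcome is strictly preferred.

```python
# Hypothetical intransitive agent over three outcomes labelled o1, o2, o3:
# o1 is at least as good as o2, o2 is at least as good as o3,
# yet o3 is strictly preferred to o1.
free_swaps = {"o3": "o2", "o2": "o1"}   # accepted at no charge
paid_swaps = {"o1": "o3"}               # the agent pays a fee for these

def money_pump(start: str, fee: float, cycles: int) -> float:
    """Return the total amount paid after going round the cycle `cycles` times."""
    holding, paid = start, 0.0
    for _ in range(3 * cycles):          # three swaps per full cycle
        if holding in paid_swaps:
            holding = paid_swaps[holding]
            paid += fee
        else:
            holding = free_swaps[holding]
    assert holding == start              # the agent ends up where it began
    return paid

total = money_pump("o3", fee=1.0, cycles=100)
```

The total grows linearly with the number of cycles, so no finite budget is safe for such an agent.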

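It follows from the transitivity and completeness axioms that transitivity holds for mixes of \(\succ\) and \(\succeq\), so that if one or both of the preferences in the premise of the transitivity axiom is strict, then the conclusion is strict. That is, if \(o_1 \succ o_2\) and \(o_2 \succeq o_3\) then \(o_1 \succ o_3\). Also, if \(o_1 \succeq o_2\) and \(o_2 \succ o_3\) then \(o_1 \succ o_3\). See Exercise 1.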

Axiom 9.3.

(Monotonicity) An agent prefers a larger chance of getting a better outcome to a smaller chance of getting the better outcome. That is, if \(o_1 \succ o_2\) and \(p > q\) then

\[ [p : o_1,\ (1-p) : o_2] \succ [q : o_1,\ (1-q) : o_2] . \]

Note that, in this axiom, \(\succ\) between outcomes represents the agent’s preference, whereas \(>\) between \(p\) and \(q\) represents the familiar comparison between numbers.

The following axiom specifies that lotteries over lotteries only depend on the outcomes and probabilities:

Axiom 9.4.

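(Decomposability) (“no fun in gambling”) An agent is indifferent between lotteries that have the same probabilities over the same outcomes, even if one or both is a lottery over lotteries. For example:

\[ [p : o_1,\ (1-p) : [q : o_2,\ (1-q) : o_3]] \sim [p : o_1,\ (1-p)q : o_2,\ (1-p)(1-q) : o_3] . \]

Also \(o_1 \sim [1 : o_1,\ 0 : o_2]\) for any outcomes \(o_1\) and \(o_2\).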

This axiom specifies that it is only the outcomes and their probabilities that define a lottery. If an agent had a preference for gambling, that would be part of the outcome space.
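Decomposability says that a lottery over lotteries can be replaced by the flat lottery with the multiplied-out probabilities. The following sketch shows one way to compute that flat form; the function name `flatten` and the list-of-pairs representation are assumptions made for illustration.

```python
from collections import defaultdict

def flatten(lottery):
    """Reduce a lottery over lotteries to a flat {outcome: probability} dict.

    A lottery is a list of (probability, item) pairs, where item is either an
    outcome label (a string) or another lottery (a list).
    """
    flat = defaultdict(float)
    for p, item in lottery:
        if isinstance(item, list):            # nested lottery: multiply through
            for outcome, q in flatten(item).items():
                flat[outcome] += p * q
        else:
            flat[item] += p
    return dict(flat)

p, q = 0.4, 0.25
nested = [(p, "o1"), (1 - p, [(q, "o2"), (1 - q, "o3")])]
flat = flatten(nested)
# flat is approximately {"o1": 0.4, "o2": 0.15, "o3": 0.45}
```

Under the axiom, the agent is indifferent between `nested` and the flat lottery described by `flat`.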

These four axioms imply some structure on the preference between outcomes and lotteries. Suppose that \(o_1 \succ o_2\) and \(o_2 \succ o_3\). Consider whether the agent would prefer

- \(o_2\) or
- the lottery \([p : o_1,\ (1-p) : o_3]\)

for different values of \(p \in [0,1]\). When \(p = 1\), the agent prefers the lottery (because, by decomposability, the lottery is equivalent to \(o_1\) and \(o_1 \succ o_2\)). When \(p = 0\), the agent prefers \(o_2\) (because the lottery is equivalent to \(o_3\) and \(o_2 \succ o_3\)). At some stage, as \(p\) is varied, the agent’s preferences flip between preferring \(o_2\) and preferring the lottery.

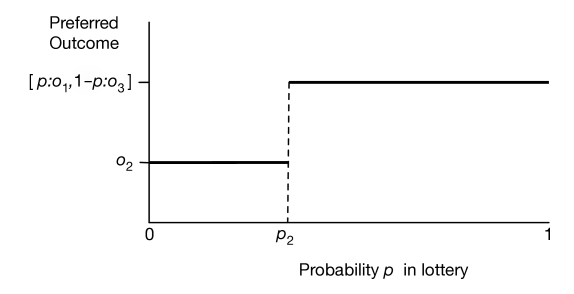

Figure 9.1 shows how the preferences must flip as \(p\) is varied. On the \(X\)-axis is \(p\) and the \(Y\)-axis shows which of \(o_2\) or the lottery is preferred. The following proposition formalizes this intuition.

Proposition 9.1.

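If an agent’s preferences are complete, transitive, and follow the monotonicity axiom, and if \(o_1 \succ o_2\) and \(o_2 \succ o_3\), there exists a number \(p_2\) such that \(0 \le p_2 \le 1\) and

- for all \(p < p_2\), the agent prefers \(o_2\) to the lottery (i.e., \(o_2 \succ [p : o_1,\ (1-p) : o_3]\)) and
- for all \(p > p_2\), the agent prefers the lottery (i.e., \([p : o_1,\ (1-p) : o_3] \succ o_2\)).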

Proof.

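By monotonicity and transitivity, if \(o_2 \succeq [p : o_1,\ (1-p) : o_3]\) for any \(p\) then, for all \(p' < p\), \(o_2 \succ [p' : o_1,\ (1-p') : o_3]\). Similarly, if \([p : o_1,\ (1-p) : o_3] \succeq o_2\) for any \(p\) then, for all \(p' > p\), \([p' : o_1,\ (1-p') : o_3] \succ o_2\). By completeness, for each value of \(p\), either \(o_2 \succ [p : o_1,\ (1-p) : o_3]\), \(o_2 \sim [p : o_1,\ (1-p) : o_3]\), or \([p : o_1,\ (1-p) : o_3] \succ o_2\). If there is some \(p\) such that \(o_2 \sim [p : o_1,\ (1-p) : o_3]\), then the proposition holds. Otherwise, a preference for either \(o_2\) or the lottery with parameter \(p\) implies preferences for either all values greater than \(p\) or for all values less than \(p\). By repeatedly subdividing the region that we do not know the preferences for, we will approach, in the limit, a value filling the criteria for \(p_2\). ∎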
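The subdivision step in the proof can be sketched as a bisection search over the lottery parameter. Here `prefers_lottery` is a hypothetical oracle that reports whether the agent strictly prefers the lottery with parameter `p` to the middle outcome; the function name `find_flip_point` and the example oracle are assumptions for illustration only.

```python
def find_flip_point(prefers_lottery, tol=1e-6):
    """Bisect on p to approximate the point p2 where preferences flip.

    prefers_lottery(p) should return True when the agent strictly prefers
    the lottery [p:o1, (1-p):o3] to o2, and False otherwise. By monotonicity
    it is False for small p and True for large p.
    """
    lo, hi = 0.0, 1.0          # o2 preferred at p=0, lottery preferred at p=1
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if prefers_lottery(mid):
            hi = mid           # lottery preferred for all p > mid
        else:
            lo = mid           # o2 preferred for all p < mid
    return (lo + hi) / 2

# Illustrative oracle: the agent prefers the lottery exactly when p > 0.7,
# so the flip point should come out near 0.7.
p2 = find_flip_point(lambda p: p > 0.7)
```

Each iteration halves the interval in which the preference is still unknown, which mirrors the limiting argument in the proof.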

The preceding proposition does not specify what the preference of the agent is at the point \(p_2\). The following axiom specifies that the agent is indifferent at this point.

Axiom 9.5.

(Continuity) Suppose \(o_1 \succ o_2\) and \(o_2 \succ o_3\); then there exists a \(p_2 \in [0,1]\) such that

\[ o_2 \sim [p_2 : o_1,\ (1-p_2) : o_3] . \]

The next axiom specifies that replacing an outcome in a lottery with an outcome that is not worse cannot make the lottery worse.

Axiom 9.6.

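(Substitutability) If \(o_1 \succeq o_2\) then the agent weakly prefers lotteries that contain \(o_1\) instead of \(o_2\), everything else being equal. That is, for any number \(p\) and outcome \(o_3\):

\[ [p : o_1,\ (1-p) : o_3] \succeq [p : o_2,\ (1-p) : o_3] . \]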

A direct corollary of this is that outcomes to which the agent is indifferent can be substituted for one another, without changing the preferences:

Proposition 9.2.

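If an agent obeys the substitutability axiom and \(o_1 \sim o_2\) then the agent is indifferent between lotteries that only differ by \(o_1\) and \(o_2\). That is, for any number \(p\) and outcome \(o_3\) the following indifference relation holds:

\[ [p : o_1,\ (1-p) : o_3] \sim [p : o_2,\ (1-p) : o_3] . \]

This follows because \(o_1 \sim o_2\) is equivalent to \(o_1 \succeq o_2\) and \(o_2 \succeq o_1\), and we can use substitutability for both cases.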

An agent is defined to be rational if it obeys the completeness, transitivity, monotonicity, decomposability, continuity, and substitutability axioms.

It is up to you to determine if this technical definition of rationality matches your intuitive notion of rationality. In the rest of this section, we show more consequences of this definition.

Although preferences may seem to be complicated, the following theorem shows that a rational agent’s value for an outcome can be measured by a real number. Those value measurements can be combined with probabilities so that preferences with uncertainty can be compared using expectation. This is surprising for two reasons:

- It may seem that preferences are too multifaceted to be modeled by a single number. For example, although one may try to measure preferences in terms of dollars, not everything is for sale or easily converted into dollars and cents.
- One might not expect that values could be combined with probabilities. An agent that is indifferent between the money \(\$(px + (1-p)y)\) and the lottery \([p : \$x,\ (1-p) : \$y]\) for all monetary values \(x\) and \(y\) and for all \(p \in [0,1]\) is known as an expected monetary value (EMV) agent. Most people are not EMV agents, because they have, for example, a strict preference between $1,000,000 and the lottery \([0.5 : \$0,\ 0.5 : \$2{,}000{,}000]\). (Think about whether you would prefer a million dollars or a coin toss where you would get nothing if the coin lands heads or two million if the coin lands tails.) Money cannot be simply combined with probabilities, so it may be surprising that there is a value that can be.
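For concreteness, the expected monetary value of the coin toss in the second point can be computed directly. This is a small sketch; the function name `expected_monetary_value` is an assumption for illustration.

```python
def expected_monetary_value(lottery):
    """Expected dollar amount of a lottery given as (probability, dollars) pairs."""
    return sum(p * dollars for p, dollars in lottery)

# Nothing on heads, two million dollars on tails.
coin_toss = [(0.5, 0), (0.5, 2_000_000)]
emv = expected_monetary_value(coin_toss)  # 1000000.0
```

An EMV agent would be indifferent between this coin toss and a certain $1,000,000, since both have the same expected monetary value; most people are not.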

Proposition 9.3.

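If an agent is rational, then for every outcome \(o_i\) there is a real number \(u(o_i)\), called the utility of \(o_i\), such that

- \(o_i \succ o_j\) if and only if \(u(o_i) > u(o_j)\) and
- utilities are linear with probabilities:

\[ u([p_1 : o_1,\ p_2 : o_2,\ \dots,\ p_k : o_k]) = p_1 u(o_1) + p_2 u(o_2) + \dots + p_k u(o_k) . \]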

Proof.

If the agent has no strict preferences (i.e., the agent is indifferent between all outcomes) then define \(u(o) = 0\) for all outcomes \(o\).

Otherwise, choose the best outcome, \(o_{best}\), and the worst outcome, \(o_{worst}\), and define, for any outcome \(o_i\), the utility of \(o_i\) to be the value \(u_i\) such that

\[ o_i \sim [u_i : o_{best},\ (1-u_i) : o_{worst}] . \]

The first part of the proposition follows from substitutability and monotonicity.

To prove the second part, any lottery can be reduced to a single lottery between \(o_{best}\) and \(o_{worst}\) by replacing each \(o_i\) by its equivalent lottery between \(o_{best}\) and \(o_{worst}\), and using decomposability to put it in the form \([p : o_{best},\ (1-p) : o_{worst}]\), with \(p\) equal to \(p_1 u(o_1) + p_2 u(o_2) + \dots + p_k u(o_k)\). The details are left as an exercise. ∎

In this proof the utilities are all in the range \([0,1]\), but any positive linear rescaling gives the same result. Sometimes \([0,100]\) is a good scale to distinguish it from probabilities, and sometimes negative numbers are useful when the outcomes have costs. In general, a program should accept any scale that is intuitive to the user.
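The following sketch computes the expected utility of a flat lottery and checks, for one example, that a positive linear rescaling of the utilities leaves the ranking of two lotteries unchanged. The outcome labels, the utility values, and the rescaling `100 * v - 20` are all assumptions chosen for illustration.

```python
def expected_utility(lottery, u):
    """Utility of a flat lottery: the sum of p_i * u(o_i)."""
    return sum(p * u[o] for p, o in lottery)

# Assumed utilities on the [0, 1] scale used in the proof.
u = {"o_best": 1.0, "o_mid": 0.6, "o_worst": 0.0}
# A positive linear rescaling of the same utilities.
rescaled = {o: 100 * v - 20 for o, v in u.items()}

lottery_a = [(0.5, "o_best"), (0.5, "o_worst")]
lottery_b = [(1.0, "o_mid")]

# The ranking of the two lotteries is the same under both scales.
prefers_b = expected_utility(lottery_b, u) > expected_utility(lottery_a, u)
prefers_b_rescaled = (expected_utility(lottery_b, rescaled)
                      > expected_utility(lottery_a, rescaled))
```

Both comparisons agree because expectation is linear, so multiplying by a positive constant and adding an offset shifts every lottery's utility in the same way.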

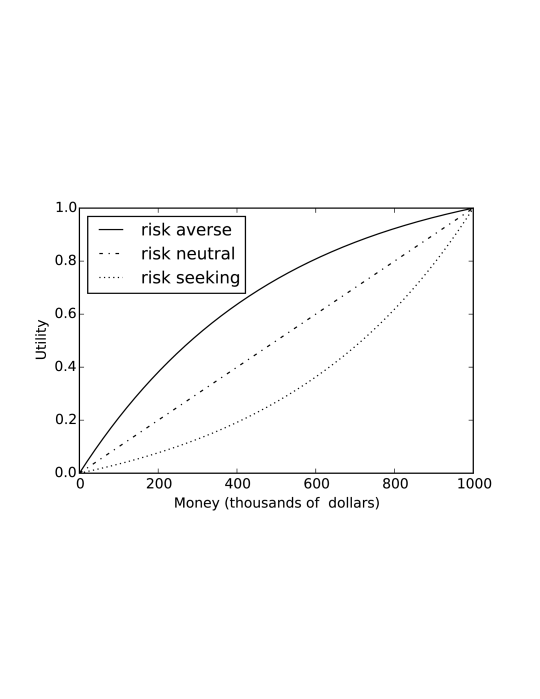

A linear relationship does not usually exist between money and utility, even when the outcomes have a monetary value. People often are risk averse when it comes to money. They would rather have \(\$n\) in their hand than some randomized setup where they expect to receive \(\$n\) but could possibly receive more or less.

Example 9.1.

Figure 9.2 shows a possible money–utility relationship for three agents. The topmost agent is risk averse, with a concave utility function. The agent with a straight-line plot is risk neutral. The lowest agent with a convex utility function is risk seeking.

The risk averse agent would rather have $300,000 than a 50% chance of getting either nothing or $1,000,000, but would prefer the gamble on the million dollars to $275,000. They would also require more than a 73% chance of winning a million dollars to prefer this gamble to half a million dollars.

For the risk-averse agent, \(u(\$999{,}000) \approx 0.9997\). Thus, given this utility function, the risk-averse agent would be willing to pay $1000 to eliminate a 0.03% chance of losing all of their money. This is why insurance companies exist. By paying the insurance company, say, $600, the risk-averse agent can change the lottery that is worth $999,000 to them into one worth $1,000,000. The insurance company expects to pay out, on average, about $300, and so expects to make $300. The insurance company can get its expected value by insuring enough houses. It is good for both parties.
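The arithmetic behind the insurance example can be checked with a few lines. The loss probability of 0.0003 used here is an assumption: it is the value implied by an expected payout of about $300 on $1,000,000. The variable names are illustrative.

```python
import math

amount_at_risk = 1_000_000   # dollars the agent could lose
p_loss = 0.0003              # assumed chance of losing everything
premium = 600                # what the agent pays the insurer
willing_to_pay = 1_000       # what removing the risk is worth to the agent

expected_payout = p_loss * amount_at_risk             # about 300
insurer_expected_profit = premium - expected_payout   # about 300
agent_surplus = willing_to_pay - premium              # 400

both_benefit = insurer_expected_profit > 0 and agent_surplus > 0
```

Any premium strictly between the insurer's expected payout and the agent's willingness to pay leaves both sides better off in expectation, which is the sense in which the trade is good for both parties.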

Rationality does not impose any conditions on what the utility function looks like.

Example 9.2.

Figure 9.3 shows a possible money–utility relationship for Chris who really wants a toy worth \(\$30\), but would also like one worth \(\$20\), and would like both even better. Apart from these, money does not matter much to Chris. Chris is prepared to take risks. For example, if Chris had \(\$29\), Chris would be very happy to bet \(\$9\) against a single dollar of another agent on a fair bet, such as a coin toss. This is reasonable because that $9 is not much use to Chris, but the extra dollar would enable Chris to buy the \(\$30\) toy. Chris does not want more than \(\$60\), because then Chris will worry about it being lost or stolen, and this will leave Chris open to extortion (e.g., by a sibling).
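A step-shaped utility function makes the bet in this example easy to check. The sketch below is entirely hypothetical: it assumes toy prices of $20 and $30, a starting amount of $29, and made-up utility levels of 0.4, 0.6, and 1.0. It also ignores any drop in utility for large amounts.

```python
def chris_utility(dollars):
    """Assumed step utility: only the toys Chris can afford matter."""
    if dollars >= 50:
        return 1.0    # can buy both toys
    if dollars >= 30:
        return 0.6    # can buy the $30 toy
    if dollars >= 20:
        return 0.4    # can buy the $20 toy
    return 0.0

keep = chris_utility(29)   # utility of not betting
# Fair coin: win $1 (ending with $30) or lose $9 (ending with $20).
bet = 0.5 * chris_utility(29 + 1) + 0.5 * chris_utility(29 - 9)
takes_bet = bet > keep
```

With these assumed numbers the bet has expected utility 0.5 against 0.4 for keeping the money, even though its expected monetary value is negative.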

Challenges to Expected Utility

There have been a number of challenges to the theory of expected utility. The Allais Paradox, presented in 1953 [Allais and Hagen, 1979], is as follows. Which would you prefer of the following two alternatives?

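- A: \(\$1\text{m}\) (one million dollars)
- B: lottery \([0.10 : \$2.5\text{m},\ 0.89 : \$1\text{m},\ 0.01 : \$0]\)

Similarly, what would you choose between the following two alternatives?

- C: lottery \([0.11 : \$1\text{m},\ 0.89 : \$0]\)
- D: lottery \([0.10 : \$2.5\text{m},\ 0.9 : \$0]\)

It turns out that many people prefer \(A\) to \(B\), and prefer \(D\) to \(C\). This choice is inconsistent with the axioms of rationality. To see why, both choices can be put in the same form:

- A,C: lottery \([0.11 : \$1\text{m},\ 0.89 : X]\)
- B,D: lottery \([0.10 : \$2.5\text{m},\ 0.01 : \$0,\ 0.89 : X]\)

In \(A\) and \(B\), \(X\) is a million dollars. In \(C\) and \(D\), \(X\) is zero dollars. Concentrating just on the parts of the alternatives that are different seems intuitive, but people seem to have a preference for certainty.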
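The inconsistency can be checked numerically. The sketch below assumes the usual statement of the paradox: A is $1m for certain, B is [0.10: $2.5m, 0.89: $1m, 0.01: $0], C is [0.11: $1m, 0.89: $0], and D is [0.10: $2.5m, 0.90: $0]. It draws arbitrary increasing utilities for the three amounts and compares the two expected-utility gaps. The helper names `eu` and `gaps` are assumptions for illustration.

```python
import random

def eu(lottery, u):
    """Expected utility of a lottery of (probability, millions-of-dollars) pairs."""
    return sum(p * u[x] for p, x in lottery)

A = [(1.0, 1.0)]
B = [(0.10, 2.5), (0.89, 1.0), (0.01, 0.0)]
C = [(0.11, 1.0), (0.89, 0.0)]
D = [(0.10, 2.5), (0.90, 0.0)]

def gaps(u):
    """Return (EU(A) - EU(B), EU(C) - EU(D)) for a utility table u."""
    return eu(A, u) - eu(B, u), eu(C, u) - eu(D, u)

rng = random.Random(0)
results = []
for _ in range(5):
    # Arbitrary increasing utilities for $0, $1m, and $2.5m.
    u0, u1, u25 = sorted(rng.random() for _ in range(3))
    results.append(gaps({0.0: u0, 1.0: u1, 2.5: u25}))
```

In every trial the two gaps agree up to floating-point rounding, so an expected-utility maximizer prefers A to B exactly when it prefers C to D. Preferring A to B and D to C therefore cannot come from any single utility function.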

Tversky and Kahneman [1974], in a series of human experiments, showed how people systematically deviate from utility theory. One such deviation is the framing effect of a problem’s presentation. Consider the following.

- A disease is expected to kill 600 people. Two alternative programs have been proposed:
  - Program A: 200 people will be saved
  - Program B: with probability 1/3, 600 people will be saved, and with probability 2/3, no one will be saved

  Which program would you favor?
- A disease is expected to kill 600 people. Two alternative programs have been proposed:
  - Program C: 400 people will die
  - Program D: with probability 1/3 no one will die, and with probability 2/3 600 will die

  Which program would you favor?

Tversky and Kahneman showed that 72% of people in their experiments chose A over B, and 22% chose C over D. However, these are exactly the same choice, just described in a different way.
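That the two framings describe the same choices can be verified by converting deaths into people saved. This is a small sketch; the variable names are illustrative, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

at_risk = 600
# Each program as a list of (probability, number of people saved).
program_a = [(Fraction(1), 200)]
program_b = [(Fraction(1, 3), 600), (Fraction(2, 3), 0)]
# Programs C and D are stated in deaths; convert to people saved.
program_c = [(Fraction(1), at_risk - 400)]
program_d = [(Fraction(1, 3), at_risk - 0), (Fraction(2, 3), at_risk - 600)]

same_ac = program_a == program_c   # True: identical outcome distributions
same_bd = program_b == program_d   # True
```

Since A and C are the same lottery, and B and D are the same lottery, an agent following the axioms above would have to make the same choice in both framings.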

Prospect theory, developed by Kahneman and Tversky, is an alternative to expected utility that better fits human behavior.