Artificial Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

9.1.3 Prospect Theory

Utility theory is a normative theory of rational agents that is justified by a set of axioms. Prospect theory is a descriptive theory that seeks to describe how people actually make decisions. A descriptive theory is evaluated by making observations of human behavior and by carrying out controlled psychology experiments.

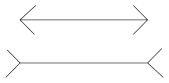

Rather than having preferences over outcomes, prospect theory considers the context of the preferences. The idea that humans perceive values in context, rather than as absolute values, is well established in psychology. Consider the Müller-Lyer illusion shown in Figure 9.4.

The horizontal lines are of equal length, but in the context of the other lines, they appear to be different. As another example, if you have one hand in cold water and one in hot water, and then put both into warm water, the warm water will feel very different to each hand. People’s preferences also depend on context. Prospect theory is based on the observation that it is not the outcomes that people have preferences over; what matters is how much the choice differs from the current situation.

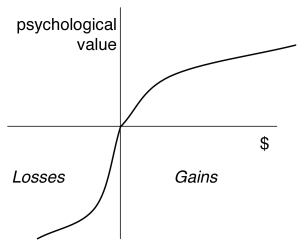

The relationship between money and value that is predicted by prospect theory is shown in Figure 9.5.

Rather than having the absolute wealth on the x-axis, this graph shows the difference from the current wealth. The origin of the x-axis corresponds to the current state of the person’s wealth. This position is called the reference point. Prospect theory predicts:

- For gains, people are risk averse. This can be seen in the curve being concave for wealth above the current wealth.
- For losses, people are risk seeking. This can be seen in the curve being convex for wealth below the current wealth.
- Losses are approximately twice as bad as gains: the slope of the curve for losses is steeper than the slope for gains.
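The three predictions can be captured by a piecewise function of the change x from the reference point. The following is a minimal sketch, assuming a power form, v(x) = x^α for gains and v(x) = -λ(-x)^α for losses; the function name `prospect_value` and the parameter values (α = 0.88, λ = 2) are illustrative assumptions, with λ = 2 chosen to match "approximately twice as bad".

```python
def prospect_value(x: float, alpha: float = 0.88, loss_aversion: float = 2.0) -> float:
    """Value of a change x relative to the reference point (x = 0).

    Assumed power form with illustrative (hypothetical) parameters:
    - alpha < 1 makes the curve concave for gains and convex for losses;
    - loss_aversion > 1 makes the curve steeper for losses than for gains.
    """
    if x >= 0:
        return x ** alpha
    return -loss_aversion * ((-x) ** alpha)


if __name__ == "__main__":
    # Tabulate the curve on both sides of the reference point.
    for change in (-100, -10, 0, 10, 100):
        print(f"{change:>5}: {prospect_value(change):8.2f}")
    # Ratio of the value of losing 100 to the value of gaining 100.
    print(prospect_value(-100) / prospect_value(100))
```

With this form, the ratio of the value of a loss to the value of an equal-sized gain is exactly -λ, so λ directly encodes how much worse losses feel.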

It is not just money that has such a relationship, but anything that has value. Prospect theory makes different predictions about how humans will act than does utility theory, as in the following examples from Kahneman [2011, pages 275, 291].

Example 9.4.

Consider Anthony and Betty:

- Anthony’s current wealth is $1 million.
- Betty’s current wealth is $4 million.

They are both offered the choice between a gamble and a sure thing:

- Gamble: an equal chance of ending up owning $1 million or $4 million.
- Sure thing: own $2 million.

Utility theory predicts that, assuming they have the same utility curve, Anthony and Betty will make the same choice, as the outcomes are identical; utility theory does not take the current wealth into account. Prospect theory makes different predictions for Anthony and Betty. Anthony is facing a gain, so he will be risk averse and will probably choose the sure thing. Betty is facing a loss, so she will be risk seeking and will choose the gamble. Anthony will be happy with the $2 million and does not want to risk being unhappy. Betty will be unhappy with the $2 million and has a chance to be happy if she takes the gamble.
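The contrast in Example 9.4 can be checked numerically. This is a sketch under stated assumptions, not a calibrated model: the value function is the hypothetical power form v(x) = x^α for gains and -λ(-x)^α for losses, with α = 0.5 and λ = 2 picked for illustration; prospect theory's probability weighting is ignored; and the utility-theory side uses a hypothetical utility u(w) = √w of final wealth. The names `prospect_score`, `utility_score`, and `choose` are illustrative. Note that the gamble has the higher expected final wealth ($2.5 million versus $2 million), so with a value curve much closer to linear than α = 0.5, Anthony would also prefer the gamble; the example only shows the qualitative effect.

```python
from typing import Callable, List, Tuple

Lottery = List[Tuple[float, float]]  # (probability, final wealth in $ millions)


def prospect_value(x: float, alpha: float = 0.5, loss_aversion: float = 2.0) -> float:
    # Hypothetical parameters: alpha = 0.5 is a strong curvature chosen for
    # illustration; loss_aversion = 2 reflects "losses are about twice as bad".
    if x >= 0:
        return x ** alpha
    return -loss_aversion * ((-x) ** alpha)


def prospect_score(lottery: Lottery, reference: float) -> float:
    # Outcomes are judged as changes from the reference point (current wealth).
    # Prospect theory's probability weighting is ignored in this sketch.
    return sum(p * prospect_value(w - reference) for p, w in lottery)


def utility_score(lottery: Lottery,
                  utility: Callable[[float], float] = lambda w: w ** 0.5) -> float:
    # Expected utility of final wealth: the current wealth plays no role.
    return sum(p * utility(w) for p, w in lottery)


GAMBLE: Lottery = [(0.5, 1.0), (0.5, 4.0)]
SURE_THING: Lottery = [(1.0, 2.0)]


def choose(current_wealth: float) -> Tuple[str, str]:
    """Return (prospect-theory choice, utility-theory choice)."""
    pt_gamble = prospect_score(GAMBLE, current_wealth)
    pt_sure = prospect_score(SURE_THING, current_wealth)
    pt = "gamble" if pt_gamble > pt_sure else "sure thing"
    ut = "gamble" if utility_score(GAMBLE) > utility_score(SURE_THING) else "sure thing"
    return pt, ut


if __name__ == "__main__":
    for name, wealth in [("Anthony", 1.0), ("Betty", 4.0)]:
        pt, ut = choose(wealth)
        print(f"{name}: prospect theory -> {pt}; utility theory -> {ut}")
```

Under these assumptions, prospect theory gives Anthony the sure thing and Betty the gamble, while the utility-theory choice is the same for both of them because `utility_score` never looks at the current wealth. Which option utility theory selects depends on the utility function assumed; that both agents select the same one does not.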

Example 9.5.

Twins Andy and Bobbie have identical tastes and identical starting jobs. There are two jobs that are identical, except that:

- job A gives a raise of $10000;
- job B gives an extra day of vacation per month.

They are each indifferent to the outcomes and toss a coin. Andy takes job A, and Bobbie takes job B.

Now the company suggests they swap jobs with a $500 bonus.

Utility theory predicts that they will swap. They were indifferent and now can be $500 better off by swapping.

Prospect theory predicts they will not swap jobs. Given they have taken their jobs, they now have different reference points. Andy thinks about losing $10000. Bobbie thinks about losing 12 days of holiday. The loss is much worse than the gain of the $500 plus the vacation or salary. They each prefer their own job.
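The reasoning in Example 9.5 reduces to simple arithmetic once each feature is priced in dollars. This is a minimal sketch under labeled assumptions: because the twins were indifferent between the jobs, the 12 extra vacation days a year are assumed to be worth the same as the $10000 raise; values are linear in dollars; and a loss-aversion factor of 2 stands in for "losses are approximately twice as bad". Setting the factor to 1 treats gains and losses alike, a simple reference-free stand-in for utility theory. The function `swap_value` and the constant names are hypothetical.

```python
def swap_value(lost: float, gained: float, bonus: float, loss_aversion: float) -> float:
    """Net value of swapping jobs, in dollar equivalents.

    lost: value of the feature of the current job that is given up.
    gained: value of the other job's feature.
    loss_aversion = 1.0 weighs gains and losses equally (reference-free);
    loss_aversion > 1 makes the loss loom larger, as prospect theory predicts.
    """
    return gained + bonus - loss_aversion * lost


RAISE = 10_000.0     # job A: a $10000 raise
VACATION = 10_000.0  # assumption: the twins were indifferent, so 12 extra
                     # vacation days a year are worth as much as the raise
BONUS = 500.0        # the company's offer for swapping

if __name__ == "__main__":
    for label, k in [("utility theory", 1.0), ("prospect theory", 2.0)]:
        andy = swap_value(lost=RAISE, gained=VACATION, bonus=BONUS, loss_aversion=k)
        bobbie = swap_value(lost=VACATION, gained=RAISE, bonus=BONUS, loss_aversion=k)
        decision = "swap" if andy > 0 and bobbie > 0 else "keep jobs"
        print(f"{label}: Andy {andy:+.0f}, Bobbie {bobbie:+.0f} -> {decision}")
```

With a factor of 1, each twin nets +$500 and swaps; with a factor of 2, each nets -$9500 and keeps their own job, matching the two predictions above.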

Empirical evidence supports the hypothesis that prospect theory is better than utility theory in predicting human decisions. However, just because it better matches a human’s choices does not mean it is the best for an artificial agent. An artificial agent that must interact with humans should, however, take into account how humans reason. For the rest of this chapter we assume utility theory as the basis for an artificial agent’s decision making and planning.

Whose Values?

Any computer program or person who acts or gives advice is using some value system to judge what is important and what is not.

Alice went on, “Would you tell me, please, which way I ought to go from here?”

“That depends a good deal on where you want to get to,” said the Cat.

“I don’t much care where –” said Alice.

“Then it doesn’t matter which way you go,” said the Cat.

Lewis Carroll (1832–1898)

Alice’s Adventures in Wonderland, 1865

We all, of course, want computers to work on our value system, but they cannot act according to everyone’s value system. When you build programs to work in a laboratory, this is not usually a problem. The program acts according to the goals and values of the program’s designer, who is also the program’s user. When there are multiple users of a system, you must be aware of whose value system is incorporated into a program. If a company sells a medical diagnostic program to a doctor, does the advice the program gives reflect the values of society, the company, the doctor, or the patient (all of whom may have very different value systems)? Does it determine the doctor’s or the patient’s values?

For autonomous cars, do the actions reflect the utility of the owner or the utility of society? Consider the choice between injuring x people walking across the road or injuring y family members by swerving to miss the pedestrians. How do the values of the lives trade off for different values of x and y, and different chances of being injured or killed? Drivers who most want to protect their family would make different trade-offs than the pedestrians would. This situation has been studied using trolley problems, where the trade-offs are made explicit and people give their moral opinions.

If you want to build a system that gives advice to someone, you should find out what is true as well as what their values are. For example, in a medical diagnostic system, the appropriate procedure depends not only on patients’ symptoms but also on their priorities. Are they prepared to put up with some pain in order to be more aware of their surroundings? Are they willing to put up with a lot of discomfort to live a bit longer? What risks are they prepared to take? Always be suspicious of a program or person that tells you what to do if it does not ask you what you want to do! As builders of programs that do things or give advice, you should be aware of whose value systems are incorporated into the actions or advice. If people are affected, their preferences should be taken into account, or at least they should be aware of whose preferences are being used as a basis for decisions.