Artificial

Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

8.5.1 Markov Chains

A Markov chain is a belief network with random variables in a sequence, where each variable only directly depends on its predecessor in the sequence. Markov chains are used to represent sequences of values, such as the sequence of states in a dynamic system or the sequence of words in a sentence. Each point in the sequence is called stage.

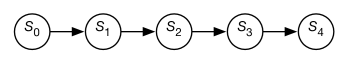

Figure 8.11 shows a generic Markov chain as a belief network. The network has 5 stages, but does not have to stop at stage ; it can extend indefinitely. The belief network conveys the independence assumption

which is called the Markov assumption.

Often the sequences are in time and, represents the state at time . Intuitively, conveys all of the information about the history that could affect the future states. The independence assumption of the Markov chain can be seen as “the future is conditionally independent of the past given the present.”

A Markov chain is a stationary model or time-homogenous model if the variables all have the same domain, and the transition probabilities are the same for each stage, i.e.,

To specify a stationary Markov chain, two conditional probabilities are provided:

-

•

specifies the initial conditions

-

•

specifies the dynamics, which is the same for each .

Stationary Markov chains are of interest for the following reasons:

-

•

They provide a simple model that is easy to specify.

-

•

The assumption of stationarity is often the natural model, because the dynamics of the world typically does not change in time. If the dynamics does change in time, it is usually because of some other feature that could also be modeled.

-

•

The network extends indefinitely. Specifying a small number of parameters gives an infinite network. You can ask queries or make observations about any arbitrary points in the future or the past.

Pagerank

Google’s initial search engine [Brin and Page, 1998] was based on Pagerank. Pagerank [Page et al., 1999] is a probability measure over web pages where the most influential web pages have the highest probability. It is based on a Markov chain of a random web surfer who starts on a random page, and with some probability picks a random page that is linked from the current page, and otherwise (if the current page has no outgoing links or with probability ) picks a page at random. The Markov chain is defined as follows:

-

•

The domain of is the set of all web pages.

-

•

is the uniform distribution of web pages: for each web page , where is the number of web pages.

-

•

The transition is defined as follows:

where there are web pages and there links on page . The way to think about this is that is the current web page, and is the next web page. If has no outgoing links, then is a page at random, which is the effect of the middle case. If has outgoing links, with probability the surfer picks a random page linked from , and otherwise picks a page at random.

-

•

is the probability someone picks a link on the current page.

This Markov chain converges to a distribution over web pages. Page et al. [1999] reported the search engine had converged to “a reasonable tolerance” for with 322 million links.

Pagerank provides a measure of influence. To get a high Pagerank, a web page should be linked from other pages with a high Pagerank. It is difficult, yet not impossible, to manipulate Pagerank for selfish reasons. One could try to artificially boost Pagerank for a specific page, by creating many pages that point to that page, but it is difficult for those referring pages to also have a high pagerank.

In the initial reported version, Brin and Page [1998] used 24 million web pages and 76 million links. The web is more complex now with many pages being dynamically generated, and search engines use much more sophisticated algorithms.

To determine the probability distribution of state , variable elimination can be used to sum out the preceding variables. The variables after are irrelevant to the probability of and need not be considered. To compute , where , only the variables between and need to be considered, and if , only the variables less than need to be considered.

A stationary distribution of a Markov chain is a distribution of the states such that if it holds at one time, it holds at the next time. Thus is a stationary distribution if for each state , . Thus,

A Markov chain is ergodic if, for any two states and in the domain of , there is a non-zero probability of eventually reaching from . A Markov chain is periodic with period if the difference between the times when it visits the same state is always divisible by . For example, consider the Markov chain with states the integers 0 to 9, and at each time it either adds 1 or adds 9 (modulo 10), each with probability 0.5. This Markov chain is periodic with period 2; if it starts in an even state at time 0, it will be in an even state at even times, and in an odd state at odd times. If the only period of a Markov chain is a period of 1, then the Markov chain is aperiodic.

If a Markov chain is ergodic and aperiodic, then there is a unique stationary distribution, and this is the equilibrium distribution that will be approached from any starting state. Thus for any distribution over , the distribution over will get closer and closer to the equilibrium distribution, as gets larger.