Artificial

Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

3.5.2 Depth-First Search

In depth-first search, the frontier acts like a LIFO (last-in, first-out) stack of paths. In a stack, elements are added and removed from the top of the stack. Using a stack means that the path selected and removed from the frontier at any time is the last path that was added.

Example 3.9.

Consider the tree-shaped graph in Figure 3.6. Suppose the start node is the root of the tree (the node at the top). As depth-first search does not define the order of the neighbors, suppose for this graph that the children of each node are ordered from left to right, and they added to the stack in reverse order so that the path to the leftmost neighbor is added to the stack last (and so removed first).

In depth-first search, like breadth-first search, the order in which the paths are expanded does not depend on the goal. The nodes at the end of the first sixteen paths expanded are numbered in order of expansion in Figure 3.6. The shaded nodes are the nodes at the ends of the paths on the frontier after the first sixteen steps, assuming none of the expanded paths end at a goal node.

Notice how the first six paths expanded are all initial parts of a single path. The node at the end of this path has no neighbors. The next path expanded is a path that follows that path as long as possible and has one extra node.

Implementing the frontier as a stack results in paths being pursued in a depth-first manner – searching one path to its completion before trying an alternative path. This method is said to involve backtracking: the algorithm selects a first alternative at each node, and it backtracks to the next alternative when it has pursued all of the paths from the first selection. Some paths may be infinite when the graph has cycles or infinitely many nodes, in which case a depth-first search may never stop.

This algorithm does not specify the order in which the paths to the neighbors are added to the frontier. The efficiency of the algorithm is sensitive to this ordering.

Example 3.10.

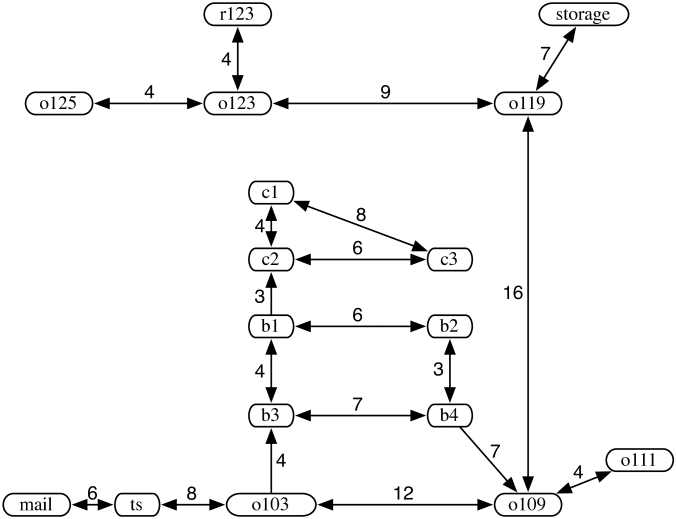

Consider depth-first search from to in the graph given in Figure 3.2. In this example, the frontier is shown as a list of paths with the top of the stack at the left of the list.

Initially, the frontier contains the trivial path .

At the next stage, the frontier contains the paths:

Next, the path is selected because it is at the top of the stack. It is removed from the frontier and replaced by extending it by one arc, resulting in the frontier

Next, the path is removed from the frontier and is replaced by the set of paths that extend it by one arc, which is the empty set because mail has no neighbors. Thus, the resulting frontier is

At this stage, the path is the top of the stack. Notice what has happened: depth-first search has pursued all paths from ts and, when all of these paths were exhausted (there was only one), it backtracked to the next element of the stack. Next, is selected and is replaced in the frontier by the paths that extend it by one arc, resulting in the frontier

Then is selected from the frontier and is replaced by all one-arc extensions, resulting in the frontier

Now the first path is selected from the frontier and is extended by one arc, resulting in the frontier

Node has no neighbors, and thus the search backtracks to the last alternative that has not been pursued, namely to the path to .

Eventually, it will find a path to which goes through .

Suppose is the path selected on line 13 of Figure 3.4. In depth-first search every other path on the frontier is of the form , for some index and some node that is a neighbor of ; that is, it follows the selected path for a number of arcs and then has exactly one extra node. Thus, the frontier contains only the current path and paths to neighbors of the nodes on this path. If the branching factor is and the selected path on the frontier has arcs, there can be at most other paths on the frontier. These are the up to alternative paths from each node. Therefore, for depth-first search, the space used at any stage is linear in the number of arcs from the start to the current node.

Comparing Algorithms

Algorithms (including search algorithms) can be compared on

-

•

the time taken,

-

•

the space used, and

-

•

the quality or accuracy of the results.

The time taken, space used, and accuracy of an algorithm are a function of the inputs to the algorithm. Computer scientists talk about the asymptotic complexity of algorithms, which specifies how the time or space grows with the input size of the algorithm. An algorithm has time (or space) complexity – read “big-oh of ” – for input size , where is some function of , if there exist constants and such that the time, or space, of the algorithm is less than for all . The most common types of functions are exponential functions such as , , or ; polynomial functions such as , , , or ; and logarithmic functions, . In general, exponential algorithms get worse more quickly than polynomial algorithms which, in turn, are worse than logarithmic algorithms.

An algorithm has time or space complexity for input size if there exist constants and such that the time or space of the algorithm is greater than for all . An algorithm has time or space complexity if it has complexity and . Typically, you cannot give a complexity on an algorithm, because most algorithms take different times for different inputs. Thus, when comparing algorithms, one has to specify the class of problems that will be considered.

Algorithm is better than , using a measure of either time, space, or accuracy, could mean any one of:

-

•

the worst case of is better than the worst case of

-

•

works better in practice, or the average case of is better than the average case of , where you average over typical problems

-

•

there is a subclass of problems for which is better than , so that which algorithm is better depends on the problem

-

•

for every problem, is better than .

The worst-case asymptotic complexity is often the easiest to show, but it is usually the least useful. Characterizing the subclass of problems for which one algorithm is better than another is usually the most useful, if it is easy to determine which subclass a given problem is in. Unfortunately, this characterization is usually very difficult to obtain.

Characterizing when one algorithm is better than the other can be done either theoretically using mathematics or empirically by building implementations. Theorems are only as valid as the assumptions on which they are based. Similarly, empirical investigations are only as good as the suite of test cases and the actual implementations of the algorithms. It is easy to disprove a conjecture that one algorithm is better than another for some class of problems by showing a counterexample, but it is usually much more difficult to prove such a conjecture.

If there is a solution on the first branch searched, the time complexity is linear in the number of arcs in the path. In the worst case, depth-first search can get trapped on infinite branches and never find a solution, even if one exists, for infinite graphs or for graphs with cycles. If the graph is a finite tree, with the forward branching factor less than or equal to and with all paths from the start having or fewer arcs, the worst-case time complexity is .

Example 3.11.

Consider the modification of the delivery graph presented in Figure 3.7, in which the agent has much more freedom in moving between locations. An infinite path leads from ts to mail, back to ts, back to mail, and so forth. As presented, depth-first search follows this path forever, never considering alternative paths from or . The frontiers for the first five iterations of the path-finding search algorithm using depth-first search are

Depth-first search can be improved by pruning paths with cycles.

Because depth-first search is sensitive to the order in which the neighbors are added to the frontier, care must be taken to do it sensibly. This ordering may be done statically (so that the order of the neighbors is fixed) or dynamically (where the ordering of the neighbors depends on the goal).

Depth-first search is appropriate when

-

•

space is restricted

-

•

many solutions exist, perhaps with long paths, particularly for the case where nearly all paths lead to a solution or

-

•

the order in which the neighbors of a node are added to the stack can be tuned so that solutions are found on the first try.

It is a poor method when

-

•

it is possible to get caught in infinite paths, which occurs when the graph is infinite or when there are cycles in the graph

-

•

solutions exist at shallow depth, because in this case the search may explore many long paths before finding the short solutions, or

-

•

there are multiple paths to a node, for example, on a grid, where all arcs go right or down, there are exponentially paths from the top-left node, but only nodes.

Depth-first search is the basis for a number of other algorithms, such as iterative deepening.