Artificial Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

2.7 Exercises

1.

The start of Section 2.3 argued that it was impossible to build a representation of a world independently of what the agent will do with it. This exercise lets you evaluate this argument.

Choose a particular world, for example, the things on top of your desk right now.

(a)

Get someone to list all of the individuals (things) that exist in this world (or try it yourself as a thought experiment).

(b)

Try to think of twenty individuals that they missed. Make these as different from each other as possible. For example, the ball at the tip of the rightmost ball-point pen on the desk, the part of the stapler that makes the staples bend, or the third word on page 1b of a particular book on the desk.

(c)

Try to find an individual that cannot be described using your natural language (such as a particular component of the texture of the desk).

(d)

Choose a particular task, such as making the desk tidy, and try to write down all of the individuals in the world at a level of description relevant to this task.

Based on this exercise, discuss the following statements.

(a)

What exists in a world is a property of the observer.

(b)

We need ways to refer to individuals other than expecting each individual to have a separate name.

(c)

Which individuals exist is a property of the task as well as of the world.

(d)

To describe the individuals in a domain, you need what is essentially a dictionary of a huge number of words and ways to combine them, and this should be able to be done independently of any particular domain.

2.

Consider the top-level controller of Example 2.6.

(a)

If the lower level reaches the timeout without getting to the target position, what does the agent do?

(b)

The definition of the target position means that, when the plan ends, the top level stops. This is not reasonable for a robot that can only change direction and cannot stop. Change the definition so that the robot keeps going.
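
To make part (b) concrete, here is a minimal Python sketch of a top layer whose belief state is a list of location names. It rests on assumptions that are ours, not Example 2.6's: the middle layer reports a boolean `arrived`, and the helper name `top_layer_step` and its `keep_going` flag are hypothetical. With `keep_going` set, a finished location is moved to the back of the list instead of being dropped, so the plan never runs out.

```python
from typing import List, Optional, Tuple


def top_layer_step(
    to_do: List[str], arrived: bool, keep_going: bool = True
) -> Tuple[List[str], Optional[str]]:
    """Hypothetical top-layer state transition and command.

    Returns the new to_do list (the belief state) and the location name
    to pass down as the next target (None if nothing is left to do).
    """
    if arrived and to_do:
        done, rest = to_do[0], to_do[1:]
        # Cycling the plan is one way to keep the robot moving;
        # without keep_going the finished location is simply dropped.
        to_do = rest + [done] if keep_going else rest
    target = to_do[0] if to_do else None
    return to_do, target
```

For example, `top_layer_step(["a", "b"], arrived=True)` returns `(["b", "a"], "b")`. Cycling is only one possible choice; other definitions that keep the robot moving would also answer the exercise.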

3.

The obstacle avoidance implemented in Example 2.5 can easily get stuck.

(a)

Show an obstacle and a target such that a robot using the controller of Example 2.5 cannot get around the obstacle (it either crashes or loops).

(b)

Even without obstacles, the robot may never reach its destination. For example, if the robot is close to its target position, but not close enough to have arrived, it may keep circling forever without reaching its target. Design a controller that can detect this situation and find its way to the target.
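
One way to detect the circling described in part (b) is to check whether the distance to the target is still shrinking. The Python sketch below is an illustration under our own assumptions, not the book's controller. It keeps the last `window` distances (a hypothetical parameter) and reports the robot as stuck when the distance has dropped by less than `min_gain` over that window. What the controller should do once it is stuck is left to the exercise.

```python
import math
from collections import deque
from typing import Deque, Tuple

Point = Tuple[float, float]


class ProgressMonitor:
    """Hypothetical helper: flags lack of progress toward a target."""

    def __init__(self, window: int = 20, min_gain: float = 0.1) -> None:
        self.min_gain = min_gain
        # Only the most recent `window` distances are kept.
        self.history: Deque[float] = deque(maxlen=window)

    def update(self, robot_pos: Point, target_pos: Point) -> bool:
        """Record the current distance; return True if the robot seems stuck."""
        self.history.append(math.dist(robot_pos, target_pos))
        if len(self.history) < self.history.maxlen:
            return False  # not enough evidence yet
        # Improvement = oldest distance in the window minus the best one.
        gain = self.history[0] - min(self.history)
        return gain < self.min_gain
```

A robot orbiting the target at a fixed radius produces nearly equal distances, so the monitor reports it as stuck once the window fills. The monitor should be reset (for example, by creating a new one) whenever the target changes.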

4.

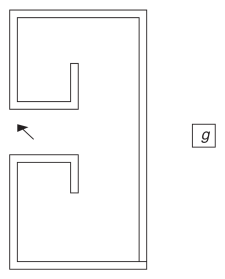

Consider the “robot trap” in Figure 2.11.

Figure 2.11: A robot trap -

(a)

This question is to explore why it is so tricky for a robot to get to location . Explain what the current robot does. Suppose one was to implement a robot that follows the wall using the “right-hand rule”: the robot turns left when it hits an obstacle and keeps following a wall, with the wall always on its right. Is there a simple characterization of the situations in which the robot should keep following this rule or head towards the target?

(b)

An intuition for how to escape such a trap is that, when the robot hits a wall, it follows the wall until the number of right turns equals the number of left turns. Show how this can be implemented, explaining the belief state and the functions of the layer.
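
The turn-counting intuition in part (b) is close to what is known as the Pledge algorithm for escaping mazes. Below is a minimal Python sketch of the belief-state transition under our own assumptions. The robot makes discrete left (+1) and right (-1) turns, and it turns left once on hitting a wall, following the right-hand rule of part (a). The mode names "seek" and "follow" are hypothetical. The belief state is the mode plus the net count of left minus right turns since the wall was hit. The robot resumes heading for the target when that count returns to zero.

```python
from typing import Tuple

LEFT, RIGHT, STRAIGHT = 1, -1, 0


def next_belief(mode: str, net_turns: int, hit_wall: bool, turn: int) -> Tuple[str, int]:
    """Hypothetical state transition for the wall-following layer.

    mode      -- "seek" (head to target) or "follow" (follow the wall)
    net_turns -- left turns minus right turns since the wall was hit
    hit_wall  -- percept: the robot has just hit an obstacle
    turn      -- the turn made this step: LEFT, RIGHT or STRAIGHT
    """
    if mode == "seek":
        if hit_wall:
            # The right-hand rule turns left on contact; count that turn.
            return "follow", LEFT
        return "seek", 0
    net_turns += turn
    if net_turns == 0:
        # As many right turns as left turns: resume heading to the target.
        return "seek", 0
    return "follow", net_turns
```

Turns made while seeking the target are deliberately not counted. Only turns made while following the wall contribute to `net_turns`.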

5.

If the current target location were moved, the middle layer of Example 2.5 would travel to the original position of that target and would not try to go to the new position. Change the controller so that the robot can adapt to moving targets.
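
The essential change is to stop caching the target's coordinates. The following is a minimal Python sketch, assuming the middle layer can call a function `get_target` (hypothetical, perhaps provided by the body) that returns the target's current position each time it is called. It also assumes an arrival threshold of 1.0 units. To keep the sketch short, it returns a desired heading rather than the left/right/straight steering of Example 2.5.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float]


def middle_layer_step(
    robot_pos: Point,
    get_target: Callable[[], Point],
    threshold: float = 1.0,
) -> Tuple[str, float]:
    """Hypothetical middle-layer step toward a possibly moving target.

    Returns ("arrived", 0.0) when close enough, otherwise
    ("steer", desired_heading) with the heading in radians.
    """
    tx, ty = get_target()  # re-read every step instead of caching it
    if math.dist(robot_pos, (tx, ty)) < threshold:
        return "arrived", 0.0
    return "steer", math.atan2(ty - robot_pos[1], tx - robot_pos[0])
```

Because the target is queried on every step, the heading changes as soon as the target moves.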

6.

The current controller visits the locations in the to_do list sequentially.

(a)

Change the controller so that it is opportunistic; when it selects the next location to visit, it selects the location that is closest to its current position. It should still visit all the locations.

(b)

Give one example of an environment in which the new controller visits all the locations in fewer time steps than the original controller.

(c)

Give one example of an environment in which the original controller visits all the locations in fewer time steps than the modified controller.

(d)

Change the controller so that, at every step, the agent heads toward whichever target location is closest to its current position.

(e)

Can the controller from part (d) get stuck and never reach a target in an example where the original controller will work? Either give an example in which it gets stuck and explain why it cannot find a solution, or explain why it gets to a goal whenever the original can.
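
For experimenting with parts (a) to (c), the Python sketch below compares two visiting orders. The first is the original controller's order (the to_do list as given). The second is the opportunistic order of part (a), which repeatedly picks the nearest remaining location. The comparison rests on a simplifying assumption: straight-line distance stands in for the number of time steps. This ignores obstacles and steering, so it only approximates what the real controller does. Locations are plain coordinate pairs here rather than names.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def greedy_order(start: Point, to_do: Sequence[Point]) -> List[Point]:
    """Opportunistic order: always visit the nearest remaining location next."""
    remaining, pos, order = list(to_do), start, []
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(pos, p))
        remaining.remove(nearest)
        order.append(nearest)
        pos = nearest
    return order


def tour_length(start: Point, order: Sequence[Point]) -> float:
    """Total straight-line distance of visiting `order` from `start`."""
    total, pos = 0.0, start
    for p in order:
        total += math.dist(pos, p)
        pos = p
    return total
```

To look for the environments that parts (b) and (c) ask about, compare `tour_length(start, to_do)` with `tour_length(start, greedy_order(start, to_do))` on a few hand-drawn layouts. Ties in `min` are broken by list order.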

7.

Change the controller so that the robot senses the environment to determine the coordinates of a location. Assume that the body can provide the coordinates of a named location.

8.

Suppose the robot has a battery that must be charged at a particular wall socket before it runs out. How should the robot controller be modified to allow for battery recharging?
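
One possible modification is a top-layer rule that checks the battery before committing to the next location. The Python sketch below rests on several hypothetical assumptions, none of which come from the text:

- The charge used is proportional to straight-line distance (`drain` per unit).
- `coords` maps location names to coordinates and includes the socket under the name "charger".
- `margin` is a safety reserve.

Under this rule, the robot heads for the next location only if it could then still reach the socket.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def next_destination(
    pos: Point,
    battery: float,
    to_do: List[str],
    coords: Dict[str, Point],
    charger: str = "charger",
    drain: float = 1.0,
    margin: float = 2.0,
) -> str:
    """Hypothetical rule: pick the next location, or the charger if needed."""
    if not to_do:
        return charger  # nothing left to do: go and recharge
    nxt = coords[to_do[0]]
    # Charge needed to reach the next location and then the socket.
    needed = (math.dist(pos, nxt) + math.dist(nxt, coords[charger])) * drain
    return to_do[0] if battery >= needed + margin else charger
```

With this rule, the belief state must also include the battery level (or an estimate of it). The to_do list is left unchanged while the robot detours to recharge.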

9.

Suppose you have a new job and must build a controller for an intelligent robot. You tell your bosses that you just have to implement a command function and a state transition function. They are very skeptical. Why these functions? Why only these? Explain why a controller requires a command function and a state transition function, but not other functions. Use proper English. Be concise.
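
As a reminder of the shape of such a controller, here is a minimal Python sketch of a hypothetical thermostat, not the robot from this chapter. Its belief state is whether it is currently heating. The state transition function maps the current belief and percept to the next belief, and the command function maps the same inputs to a command. The thresholds `LOW` and `HIGH` are illustrative assumptions.

```python
from typing import List

LOW, HIGH = 18.0, 22.0  # illustrative temperature thresholds


def transition(heating: bool, temp: float) -> bool:
    """State transition function: (belief, percept) -> next belief."""
    if temp < LOW:
        return True
    if temp > HIGH:
        return False
    return heating  # between thresholds: remember what we were doing


def command(heating: bool, temp: float) -> str:
    """Command function: (belief, percept) -> command."""
    return "heat" if transition(heating, temp) else "off"


def run(percepts: List[float], belief: bool = False) -> List[str]:
    """Controller loop: emit a command, then update the belief state."""
    commands = []
    for temp in percepts:
        commands.append(command(belief, temp))
        belief = transition(belief, temp)
    return commands
```

For example, `run([17.0, 20.0, 23.0, 20.0])` produces "heat", "heat", "off", "off". The same reading of 20.0 yields different commands depending on the remembered belief state.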