Artificial

Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

10.3.2 Hidden Variables

The next simplest case is where the model is given, but not all variables are observed. A hidden variable or a latent variable is a variable in a belief network whose value is not observed for any of the examples. That is, there is no column in the data corresponding to that variable.

| Model | Data | ➪ | Probabilities |

|---|---|---|---|

|

Example 10.12.

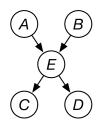

Figure 10.8 shows a typical case. Assume that all the variables are binary. The model contains a hidden variable that is in the model but not the data set. The aim is to learn the parameters of the model that includes the hidden variable . There are 10 parameters to learn.

Note that, if was not part of the model, the algorithm would have to learn , , , , which has 14 parameters. The reason for introducing hidden variables is, paradoxically, to make the model simpler and, therefore, less prone to overfitting.

The expectation maximization or EM algorithm for learning belief networks with hidden variables is essentially the same as the EM algorithm for clustering. The E step, shown in Figure 10.9, involves probabilistic inference for each example to infer the probability distribution of the hidden variable(s) given the observed variables for that example. The M step of inferring the probabilities of the model from the augmented data is the same as in the fully observable case discussed in the previous section, but, in the augmented data, the counts are not necessarily integers.

|

|

|