Artificial

Intelligence 2E

foundations of computational agents

The third edition of Artificial Intelligence: foundations of computational agents, Cambridge University Press, 2023 is now available (including full text).

11.2.2 Extensive Form of a Game

Whereas the normal form of a game represents controllers as single units, it is often more natural to specify the unfolding of a game through time. The extensive form of a game is an extension of a single-agent decision tree. We first give a definition that assumes the game is fully observable (called perfect information in game theory).

A perfect-information game in extensive form or a game tree is a finite tree where the nodes are states and the arcs correspond to actions by the agents. In particular:

-

•

Each internal node is labeled with an agent (or with nature). The agent is said to control the node.

-

•

Each arc out of a node labeled with agent corresponds to an action for agent .

-

•

Each internal node labeled with nature has a probability distribution over its children.

-

•

The leaves represent final outcomes and are labeled with a utility for each agent.

The extensive form of a game specifies a particular unfolding of the game. Each path to a leaf, called a run, specifies one particular way that the game could proceed depending on the choices of the agents and nature.

A strategy for agent is a function from nodes controlled by agent into actions. That is, a strategy selects a child for each node that agent controls. A strategy profile consists of a strategy for each agent.

Example 11.2.

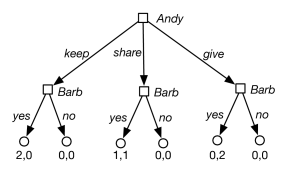

Consider a sharing game where there are two agents, Andy and Barb, and there are two identical items to be divided between them. Andy first selects how they will be divided: Andy keeps both items, they share and each person gets one item, or he gives both items to Barb. Then Barb gets to either reject the allocation and they both get nothing, or accept the allocation and they both get the allocated amount.

The extensive form of the sharing game is shown in Figure 11.2. Andy has 3 strategies. Barb has strategies; one for each combination of assignments to the nodes she controls. As a result, there are 24 strategy profiles.

Given a strategy profile, each node has a utility for each agent. The utility for an agent at a node is defined recursively from the bottom up:

-

•

The utility for each agent at a leaf is given as part of the leaf.

-

•

The utility for agent of a node controlled by that agent is the utility for the agent of the child node that is selected by agent’s strategy.

-

•

The utility for agent of a node controlled by another agent is the utility for agent of the child node that is selected by agent ’s strategy.

-

•

The utility for agent for a node controlled by nature is the expected value of the utility for agent of the children. That is, , where the sum is over the children of node , and is the probability that nature will choose child .

Example 11.3.

In the sharing game, suppose we have the following strategy profile: Andy chooses and Barb chooses , , for each of the nodes she gets to choose for. Under this strategy profile, the utility for Andy at the leftmost internal node is , the utility for Andy at the center internal node is , and the utility for Andy at the rightmost internal node is . The utility for Andy at the root is .

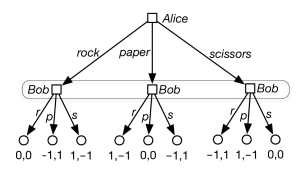

The preceding definition of the extensive form of a game assumes that the agents can observe the state of the world (i.e., at each stage they know which node they are at). This means that the state of the game must be fully observable. In a partially observable game or an imperfect-information game, the agents do not necessarily know the state of the world when they have to decide what to do. This includes simultaneous action games where more than one agent needs to decide what to do at the same time. In such cases, the agents do not know which node they are at in the game tree. To model these games the extensive form of a game is extended to include information sets. An information set is a set of nodes, all controlled by the same agent and all with the same set of available actions. The idea is that the agent cannot distinguish the elements of the information set. The agent only knows the game state is at one of the nodes in the information set, not which node. In a strategy, the agent chooses one action for each information set; the same action is carried out at each node in the information set. Thus, in the extensive form, a strategy specifies a function from information sets to actions.

Example 11.4.

Figure 11.3 gives the extensive form for the rock-paper-scissors game of Example 11.1. The elements of the information set are in a rounded rectangle. In a strategy, Bob must treat each node in the information set the same. When Bob gets to choose his action, he does not know which action Alice has chosen.